Chapter 3 Linear Models

As we all know, linear models are the workhorse of statistical modeling. These are ubiquitous across disciplines whether in the OLS regression framework or in a classical ANOVA framework. Here, we’re going to focus on the OLS regression framework to highlight lots of what you can do with linear models in R. We will work with the COVID-19 data from Colorado that we worked with before.

library(dplyr)

load("data/colo_dat.rda")

colo_dat <- colo_dat %>%

group_by(county) %>%

summarise_all(.fun=last) %>%

mutate(cases_pc = cases*10000/tpop)There are a few things worth noting here. First, as discussed previously, the main argument here is a formula where the outcome variable is on the left-hand side of the tilde (~) and the explanatory variables are on the right-hand side of the tilde. They can be separated by plus signs if an additive model is desired or with asterisks if the effect of the variables is conditional on each other. We will see an example of this below.

mod <- lm(log(cases_pc) ~ over60_pop, data=colo_dat)

summary(mod)##

## Call:

## lm(formula = log(cases_pc) ~ over60_pop, data = colo_dat)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1.72413 -0.52199 -0.00148 0.50718 2.26610

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -12.380 5.290 -2.340 0.02256 *

## over60_pop 24.320 8.338 2.917 0.00494 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.8475 on 61 degrees of freedom

## Multiple R-squared: 0.1224, Adjusted R-squared: 0.108

## F-statistic: 8.507 on 1 and 61 DF, p-value: 0.004944The stargazer package allows us to export model results in a way that is nicely presented in an RMarkdown document, a LaTeX document or to word through html.

library(stargazer)

stargazer(mod, type="html",

style="ajps",

covariate.labels=c(

"Proportion over 60",

"Intercept"),

star.cutoffs=.05,

star.char = "`*`",

notes="`*` p < .05",

notes.append=FALSE)| log(cases_pc) | |

| Proportion over 60 |

24.320*

|

| (8.338) | |

| Intercept |

-12.380*

|

| (5.290) | |

| N | 63 |

| R-squared | 0.122 |

| Adj. R-squared | 0.108 |

| Residual Std. Error | 0.847 (df = 61) |

| F Statistic |

8.507* (df = 1; 61)

|

* p < .05

|

|

To include in a word document, you could do the following:

cat(

stargazer(mod, type="html",

style="ajps",

covariate.labels=c(

"Proportion over 60",

"Intercept"),

star.cutoffs=.05,

star.char = "`*`",

notes="`*` p < .05",

notes.append=FALSE),

file="table.html")Then you could choose Insert \(\rightarrow\) File… and browse to the file table.html that you just created. This will bring the table into your Word document nicely formatted. Alternatively, you could write in RMarkdown and knit to a Word document.

Now, back to the model. In the above, we know that for every 1 unit change in over60_pop (the proportion of the population over 60 years old), that the log of cases per 10,000 people goes up by 24 units. This is a huge change on the log scale, but a one-unit change is also huge. It represents changing from a county with no people over 60 years old to a county that has only people over 60 years old. In reality, the range of the over60_pop variable is:

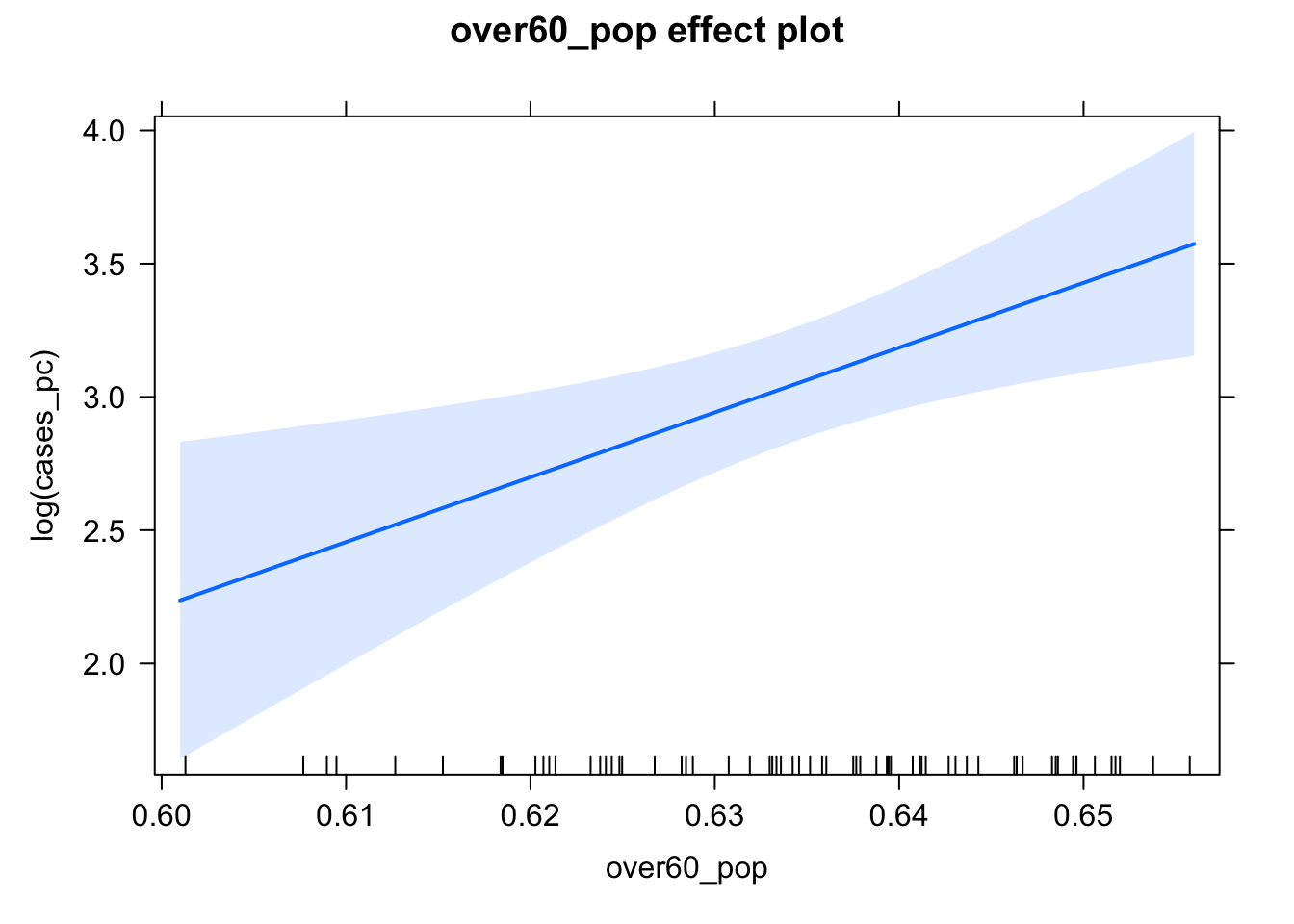

range(colo_dat$over60_pop)## [1] 0.6012898 0.6557599Given that it varies in such a small range, it might be worth trying to plot out the variable’s effect. There are a couple of different ways to do this. One is with the effects package that produces lattice plots of the effects. The other is with the ggeffects package, which makes it easy to make ggplot2 plots of the effect. Let’s start with the effects package.

You Try It!

Using the GSS 2016 data that we used in the previous chapter, estimate a regression of aid_scale on age, sei10, the log of realinc, partyid and sex.

- Make a nice looking table of the results.

3.1 Effects Plots

The Effect() function from the effects package allows us to plot the effect of a variable in a model. For example,

library(effects)

e <- Effect("over60_pop", mod,

xlevels=list(over60_pop = 50))

summary(e)##

## over60_pop effect

## over60_pop

## 0.601 0.602 0.604 0.605 0.606 0.607 0.608 0.609 0.61 0.611 0.612

## 2.236578 2.260898 2.309538 2.333858 2.358179 2.382499 2.406819 2.431139 2.455459 2.479780 2.504100

## 0.614 0.615 0.616 0.617 0.618 0.619 0.62 0.621 0.622 0.624 0.625

## 2.552740 2.577060 2.601381 2.625701 2.650021 2.674341 2.698661 2.722982 2.747302 2.795942 2.820262

## 0.626 0.627 0.628 0.629 0.63 0.631 0.632 0.634 0.635 0.636 0.637

## 2.844583 2.868903 2.893223 2.917543 2.941863 2.966184 2.990504 3.039144 3.063464 3.087785 3.112105

## 0.638 0.639 0.64 0.641 0.642 0.644 0.645 0.646 0.647 0.648 0.649

## 3.136425 3.160745 3.185065 3.209386 3.233706 3.282346 3.306666 3.330987 3.355307 3.379627 3.403947

## 0.65 0.651 0.652 0.654 0.655 0.656

## 3.428267 3.452588 3.476908 3.525548 3.549868 3.574189

##

## Lower 95 Percent Confidence Limits

## over60_pop

## 0.601 0.602 0.604 0.605 0.606 0.607 0.608 0.609 0.61 0.611 0.612

## 1.641520 1.681373 1.760876 1.800516 1.840072 1.879537 1.918903 1.958159 1.997295 2.036300 2.075158

## 0.614 0.615 0.616 0.617 0.618 0.619 0.62 0.621 0.622 0.624 0.625

## 2.152377 2.190699 2.228802 2.266659 2.304242 2.341518 2.378450 2.414995 2.451106 2.521804 2.556262

## 0.626 0.627 0.628 0.629 0.63 0.631 0.632 0.634 0.635 0.636 0.637

## 2.590032 2.623033 2.655180 2.686389 2.716572 2.745647 2.773542 2.825573 2.849650 2.872432 2.893947

## 0.638 0.639 0.64 0.641 0.642 0.644 0.645 0.646 0.647 0.648 0.649

## 2.914243 2.933384 2.951448 2.968518 2.984681 3.014627 3.028570 3.041923 3.054751 3.067112 3.079057

## 0.65 0.651 0.652 0.654 0.655 0.656

## 3.090632 3.101877 3.112828 3.133969 3.144211 3.154262

##

## Upper 95 Percent Confidence Limits

## over60_pop

## 0.601 0.602 0.604 0.605 0.606 0.607 0.608 0.609 0.61 0.611 0.612

## 2.831635 2.840423 2.858200 2.867201 2.876285 2.885460 2.894735 2.904119 2.913624 2.923260 2.933041

## 0.614 0.615 0.616 0.617 0.618 0.619 0.62 0.621 0.622 0.624 0.625

## 2.953104 2.963422 2.973960 2.984743 2.995800 3.007165 3.018873 3.030968 3.043497 3.070081 3.084262

## 0.626 0.627 0.628 0.629 0.63 0.631 0.632 0.634 0.635 0.636 0.637

## 3.099133 3.114773 3.131265 3.148697 3.167155 3.186720 3.207466 3.252715 3.277279 3.303137 3.330263

## 0.638 0.639 0.64 0.641 0.642 0.644 0.645 0.646 0.647 0.648 0.649

## 3.358607 3.388106 3.418683 3.450253 3.482730 3.550065 3.584763 3.620050 3.655862 3.692142 3.728838

## 0.65 0.651 0.652 0.654 0.655 0.656

## 3.765903 3.803298 3.840987 3.917127 3.955526 3.994115Note that in the summary above, it shows 50 values from the smallest to largest values of over60_pop along with their predictions and \(95\%\) confidence intervals. We can make a plot of those values with the plot() function:

plot(e)

In general, the effect package will unwind any functions or transformations of the independent variables, but not the dependent variable. So, we would have to do that ourselves. Doing this kind of a transformation is a bit difficult without some more intervention, so why don’t we move to the ggeffects package. We’ll make the same plot as above.

You Try It!

Use the effects package to make a plot of the the effect of realinc on aid_scale.

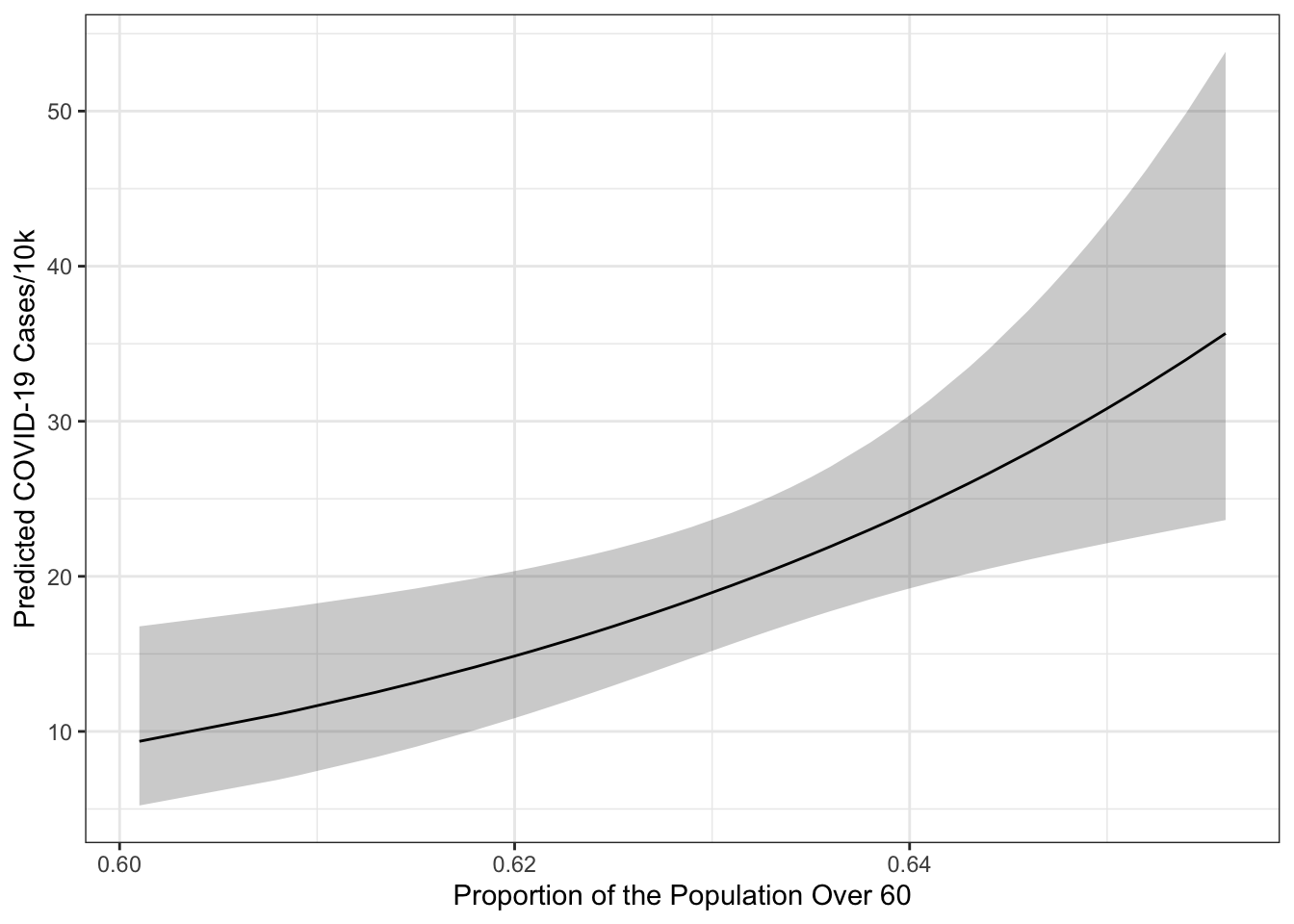

library(ggeffects)

library(ggplot2)

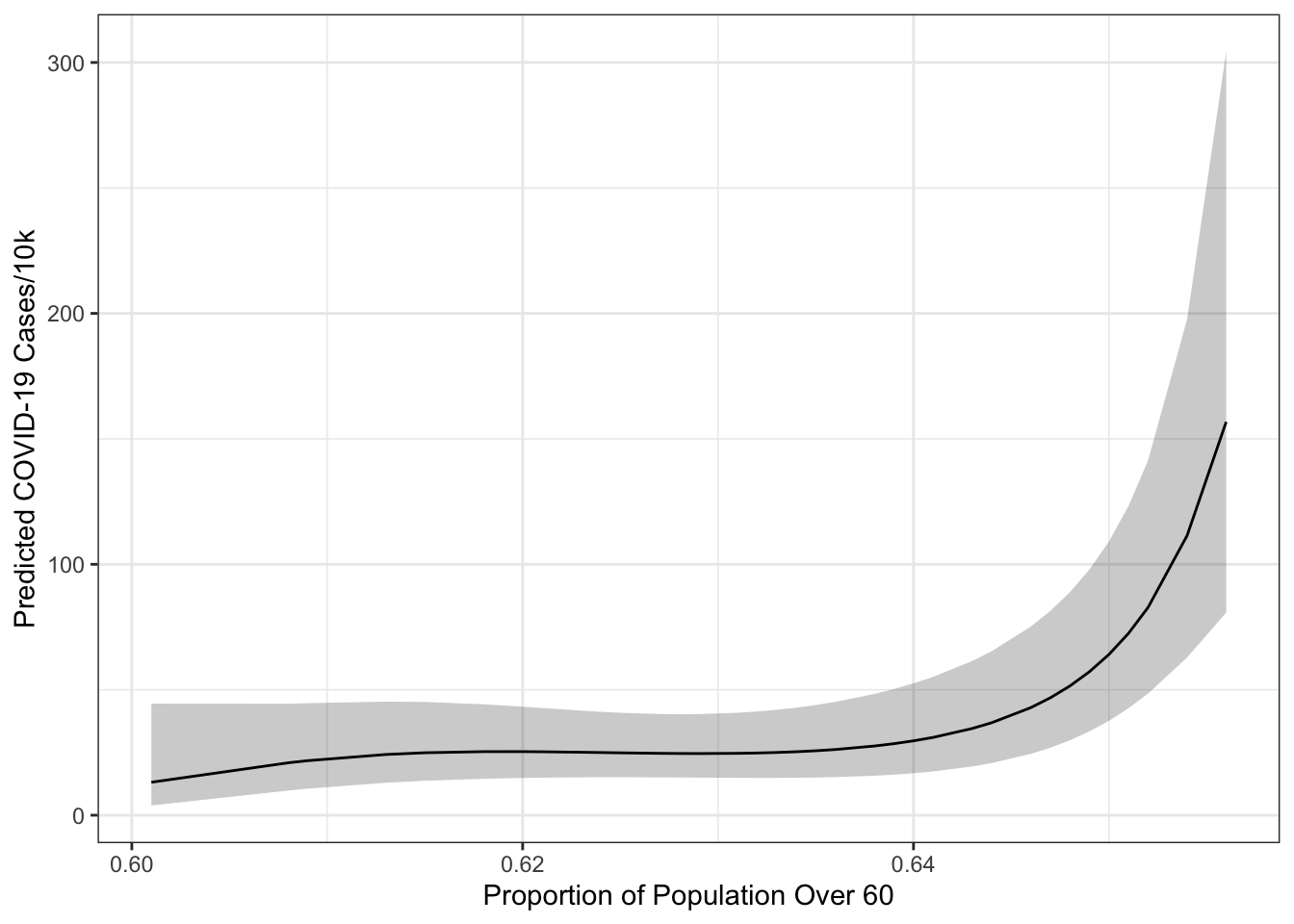

preds <- ggpredict(mod,

terms = "over60_pop [all]")## Model has log-transformed response. Back-transforming predictions to original response scale. Standard errors are still on the log-scale.ggplot(preds, aes(x=x, y=predicted)) +

geom_ribbon(aes(ymin=conf.low, ymax=conf.high),

alpha=.25) +

geom_line() +

theme_bw() +

labs(x="Proportion of the Population Over 60",

y="Predicted COVID-19 Cases/10k")

The terms= argument identifies the terms to move around. Everything else will be held constant at representative values. In particular over60_pop [all] means move the over60_pop variable and use all of its unique values to generate predictions. The output from ggpredict always names the first variable in terms= - x, the predictions predicted and the confidence bounds conf.low and conf.high. You may also note that a warning got printed indicating that the model had a log-transformed response and that the predictions and confidence intervals were back-transformed to the original scale of the \(y\)-variable (i.e., cases/10k rather than log(cases/10k)). The standard errors remain on the scale defined by the model rather than the original response scale. The confidence intervals are transformed using an end-point transformation which means that they are arrived at, in this case, on the log scale and then simply transformed by applying exp() to the bounds and fit.

You Try It!

Use the ggeffects package to replicate the graph you just made with the effects package.

To see how it works, let’s add a categorical variable - the three category metro, urban, rural variable should work. We’ll also add in the republican majority variable, too.

library(stringr)

colo_dat <- colo_dat %>%

mutate(mur = str_extract(urban_rural, "Metro|UP|Rural"),

mur = factor(mur, levels=c("Rural", "UP", "Metro")),

rep_maj = factor(repvote > .5,

levels=c(FALSE,TRUE),

labels=c("No", "Yes")))Now, we can estimate the model:

mod2 <- lm(log(cases_pc) ~ mur + rep_maj + over60_pop, data=colo_dat)

summary(mod2)##

## Call:

## lm(formula = log(cases_pc) ~ mur + rep_maj + over60_pop, data = colo_dat)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1.76616 -0.53921 0.07446 0.43907 2.24993

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -13.5126 6.1012 -2.215 0.03072 *

## murUP -0.2121 0.2655 -0.799 0.42758

## murMetro -0.5382 0.3176 -1.695 0.09552 .

## rep_majYes -0.4404 0.2320 -1.898 0.06261 .

## over60_pop 26.9300 9.6773 2.783 0.00726 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.827 on 58 degrees of freedom

## Multiple R-squared: 0.2055, Adjusted R-squared: 0.1507

## F-statistic: 3.75 on 4 and 58 DF, p-value: 0.008826Here, we see a slightly bigger effect of over60_pop. If we wanted to test the significance of the term rather than the coefficient (i.e., all of the mur coefficients jointly equal to zero rather than independent tests), we could use that Anova() function from the car package.

library(car)

Anova(mod2)## Anova Table (Type II tests)

##

## Response: log(cases_pc)

## Sum Sq Df F value Pr(>F)

## mur 2.011 2 1.4706 0.238224

## rep_maj 2.465 1 3.6042 0.062614 .

## over60_pop 5.296 1 7.7440 0.007261 **

## Residuals 39.665 58

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1By default, you get a type II test, though you could get a type III test, by specifying type="III". To remind, the difference comes from how higher order terms are handled. Let’s imagine we’ve got the following model:

\[y = b_0 + b_1x + b_2z + b_3xz + b_4w + e\]

In this case, we have a higher order term (the interaction between \(x\) and \(z\)). A type II test would execute the following four tests:

- Is \(x\) significant controlling for \(z\) and \(w\), but not \(xz\).

- Is \(z\) significant controlling for \(x\) and \(w\), but not \(xz\).

- Is \(xz\) significant controlling for \(x\), \(z\) and \(w\).

- Is \(w\) significant controlling for \(x\), \(z\) and \(xz\).

A type III test would test each term controlling for all other terms, including higher order terms. This is more like what you would get from the regression output. Arguably, type II tests are more interesting because they don’t presume the existence of the higher order term in testing lower-order terms, thus making them appropriate for evaluating the significance of lower order terms independently of the higher order term.

3.2 Diagnostic Tools: Linearity

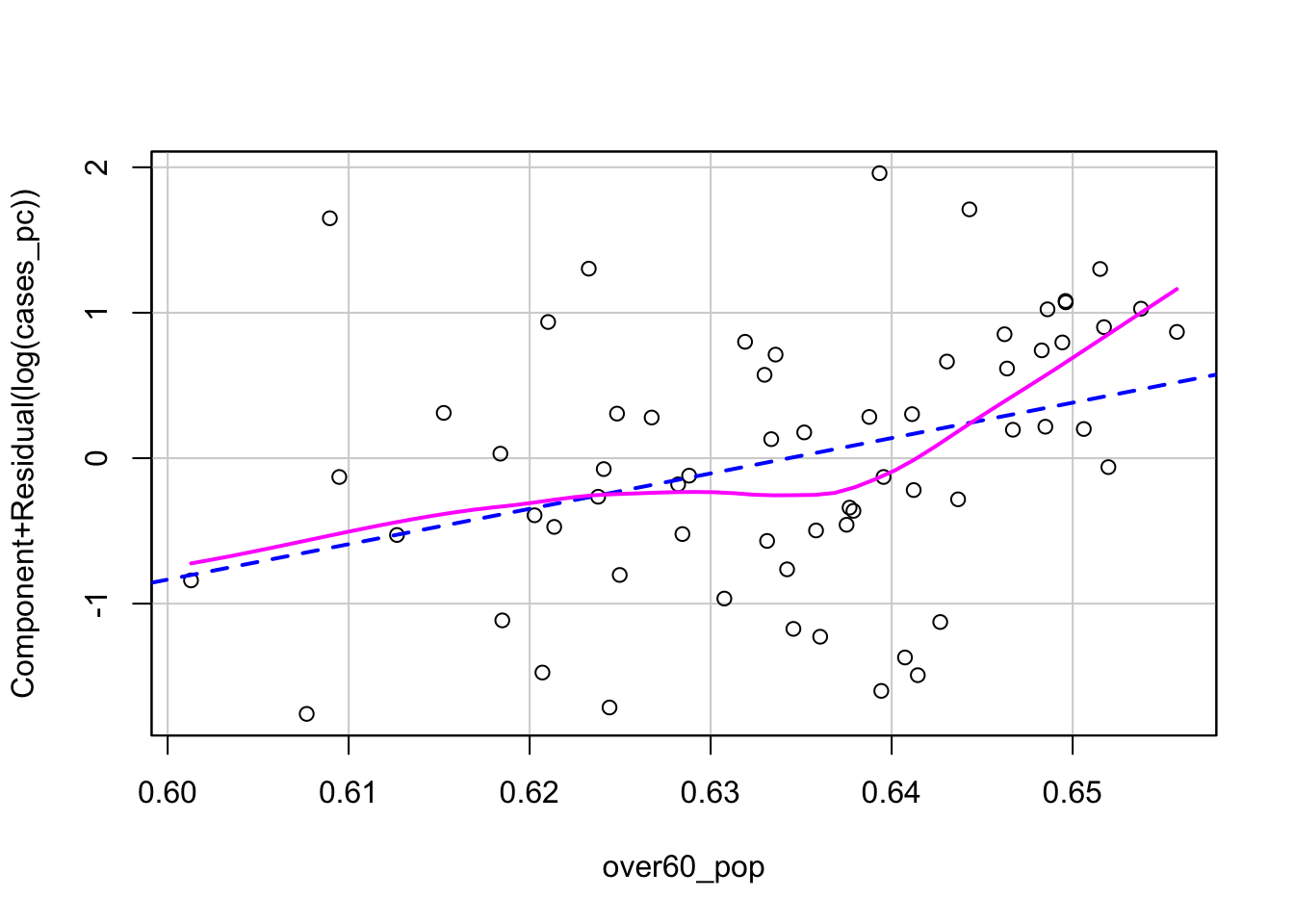

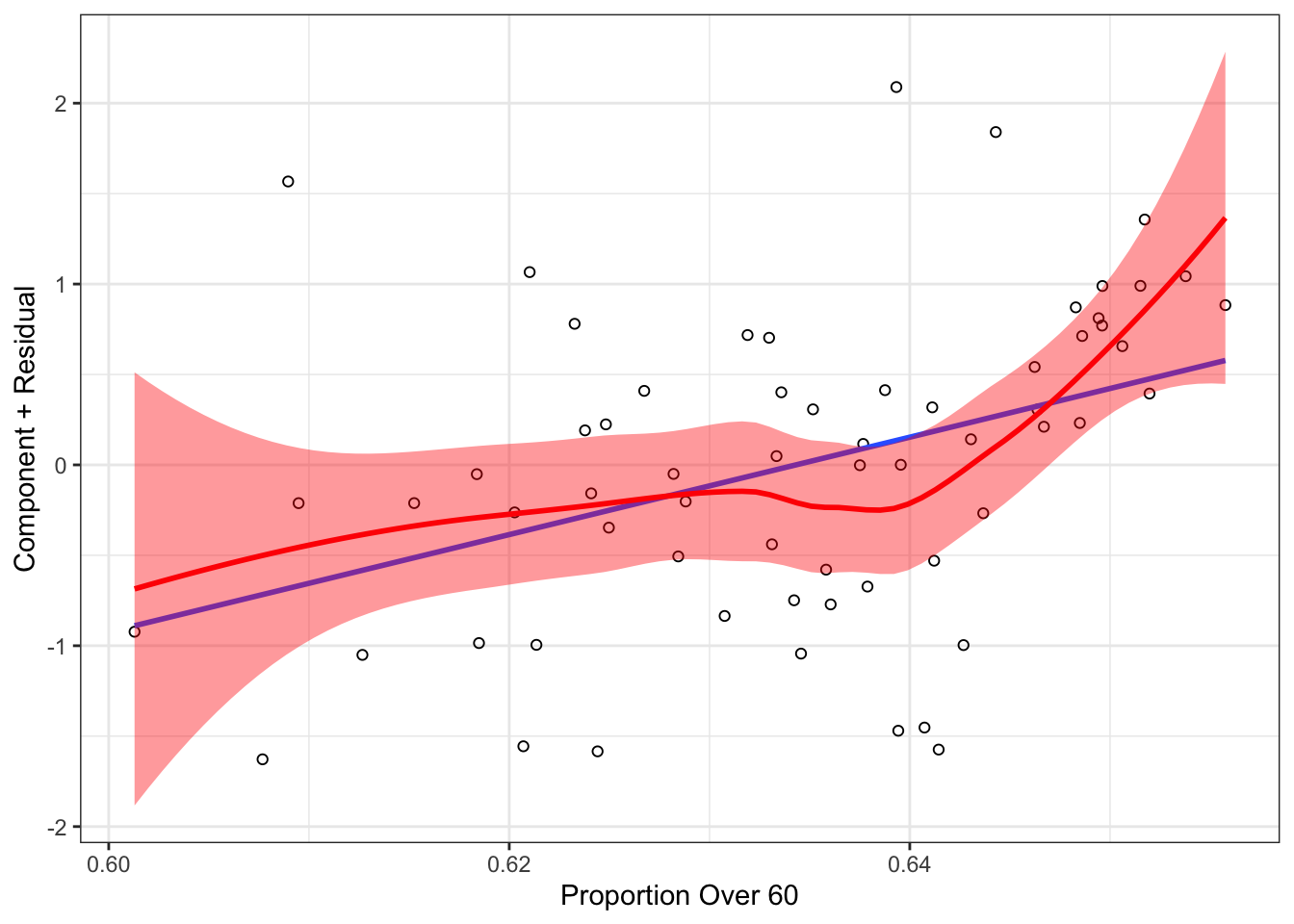

There are lots of diagnostic tools for the linear model. Perhaps one of the most useful is the component + residual plot. This can be produced with the crPlot() function in the car package. If we’ve got the following model:

\[\log(\text{Cases}) = b_0 + b_1\text{Urban} + b_2\text{Metro} + b_3\text{Repbilican Majority} + b_4\text{Over 60} + e\]

then the component plus residual plot for over60_pop would have the variable itself on the \(x\)-axis and on the \(y\)-axis would be \(b_4\text{Over 60} + e\) the component (the systematic part of the model relating to the variable of interest) plus the model residual. The line running through plot has a slope of \(b_4\). The pink line is a local polynomial regression fit to the points. This allows us to evaluate whether there are any un-modeled non-linearities in the data.

crPlot(mod, "over60_pop", smooth = list(smoother=loessLine,

smoother.args=list(var=TRUE))) We could try a couple of different potential solutions to the non-linearity exhibited in the plot. If we thought the non-linearity was simple and monotone, we could use a non-linear transformation. The Box-Tidwell transformation would be appropriate here. This model identifies the optimal power transformation to linearize the relationship.

We could try a couple of different potential solutions to the non-linearity exhibited in the plot. If we thought the non-linearity was simple and monotone, we could use a non-linear transformation. The Box-Tidwell transformation would be appropriate here. This model identifies the optimal power transformation to linearize the relationship.

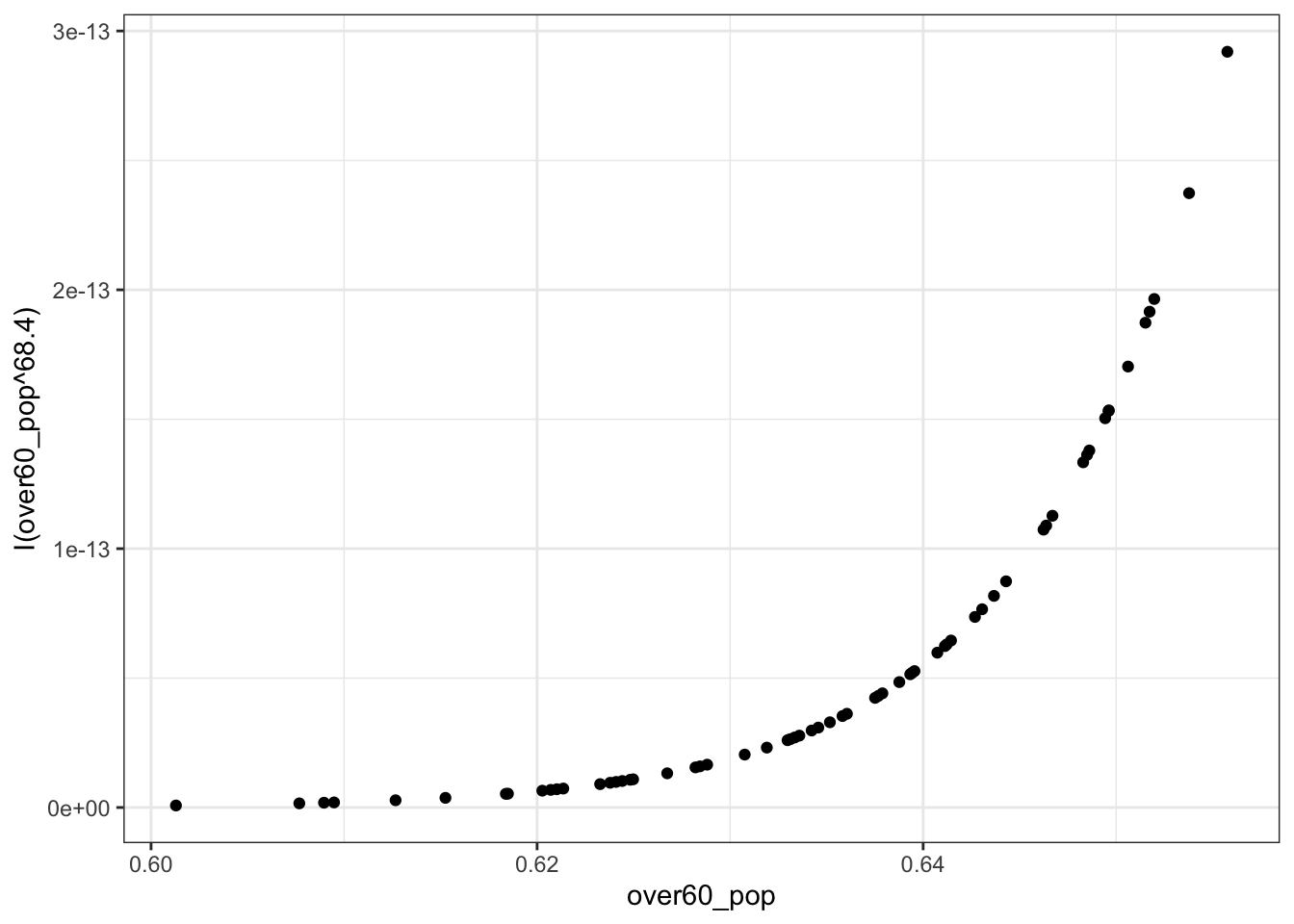

boxTidwell(log(cases_pc) ~ over60_pop, ~mur + rep_maj, data=colo_dat)## MLE of lambda Score Statistic (z) Pr(>|z|)

## 68.388 1.7388 0.08207 .

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## iterations = 4The proposed power transformation is \(x^68.4\). This is a big transformation. We could see what it looks like if we wanted.

ggplot(colo_dat, aes(x=over60_pop, y=I(over60_pop^68.4))) +

geom_point() +

theme_bw() However, the \(p\)-value indicates that the transformation isn’t necessary. Here, the null hypothesis is that no transformation is needed. If, instead, we thought that the non-nomotonicity in the CR Plot was interesting, we could estimate a polynomial. In R, polynomials are estimated with the

However, the \(p\)-value indicates that the transformation isn’t necessary. Here, the null hypothesis is that no transformation is needed. If, instead, we thought that the non-nomotonicity in the CR Plot was interesting, we could estimate a polynomial. In R, polynomials are estimated with the poly() function and by default are orthogonalized. This essentially implements a type II test for polynomial regressors. You can turn off the orthogonalization by specifying raw=TRUE as an argument to the function. This would implement a type III test for the polynomial regressors. For example the code below would estimate a third-degree polynomial in over60_pop:

You Try It!

Use the methods discussed above to evaluate linearity for age, sei10 and realinc. For realinc see whether the log is just as good as other transformations.

mod2b <- lm(log(cases_pc) ~ mur + rep_maj + poly(over60_pop, 3), data=colo_dat)

summary(mod2b)##

## Call:

## lm(formula = log(cases_pc) ~ mur + rep_maj + poly(over60_pop,

## 3), data = colo_dat)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1.55831 -0.49263 0.06746 0.34319 2.11012

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 3.4827 0.2504 13.907 < 2e-16 ***

## murUP -0.1239 0.2600 -0.477 0.63549

## murMetro -0.5923 0.3089 -1.917 0.06030 .

## rep_majYes -0.3427 0.2286 -1.499 0.13947

## poly(over60_pop, 3)1 2.8907 0.9553 3.026 0.00374 **

## poly(over60_pop, 3)2 1.5346 0.8290 1.851 0.06942 .

## poly(over60_pop, 3)3 1.3208 0.8174 1.616 0.11176

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.8014 on 56 degrees of freedom

## Multiple R-squared: 0.2797, Adjusted R-squared: 0.2025

## F-statistic: 3.623 on 6 and 56 DF, p-value: 0.004164As you can see, the second and third order terms are not statistically significant, indicating that the linear relationship is sufficient. These findings make sense if we include the confidence bounds around the loess curve in the CR plot. There is a bug in the crPlot() function that doesn’t allow us to do this, but we could make it “by hand” with ggplot2. In the code below, the augment() function comes from the broom package and it puts model results (like fitted values and residuals) in a data frame with the original variables in the model. In addition, we’re obtaining the partial residuals for the over60_pop variable and adding those into the data.

library(broom)

aug <- augment(mod2, data=colo_dat) %>%

mutate(p.resid = residuals(mod2, type="partial")[,"over60_pop"])

ggplot(aug, aes(x=over60_pop, y=p.resid)) +

geom_point(shape=1) +

geom_smooth(method="lm",

se=FALSE)+

geom_smooth(method="loess",

se=TRUE,

col="red",

fill=rgb(1,0,0,.25, maxColorValue = 1)) +

theme_bw() +

labs(x="Proportion Over 60",

y="Component + Residual")

In the plot above, you can see that the linear model line is generally within the confidence bounds of the loess curve, making it unsurprising that potential fixes to the non-linearity problem were not necessary.

3.3 Diagnostic Tools: Heteroskedasticity

There are tools for detecting heteroskedasticity. The car package has a function called ncvTest() which estimates a score test of the residuals. It starts by calculating the standardized squared residuals

\[U_{i} = \frac{E_{i}^{2}}{\hat{\sigma}^{2}} = \frac{E_{i}^{2}}{\frac{\sum E_{i}^{2}}{n}}\]

Then, it regress the \(U_{i}\) on all of the explanatory variable \(X\)’s or the fitted values \(\hat{y}\), finding the fitted values:

\[U_{i} = \eta_{0} + \eta_{1}X_{i1} + \cdots + \eta_{p}X_{ip} + \omega_{i}\]

The score is then calculated as:

\[S_{0}^{2} = \frac{\sum(\hat{U}_{i} - \bar{U})^{2}}{2}\]

and \(S_{0}^{2}\) is distributed as \(\chi^{2}\) with \(p\) degrees of freedom. The results for our model are below:

ncvTest(mod2)## Non-constant Variance Score Test

## Variance formula: ~ fitted.values

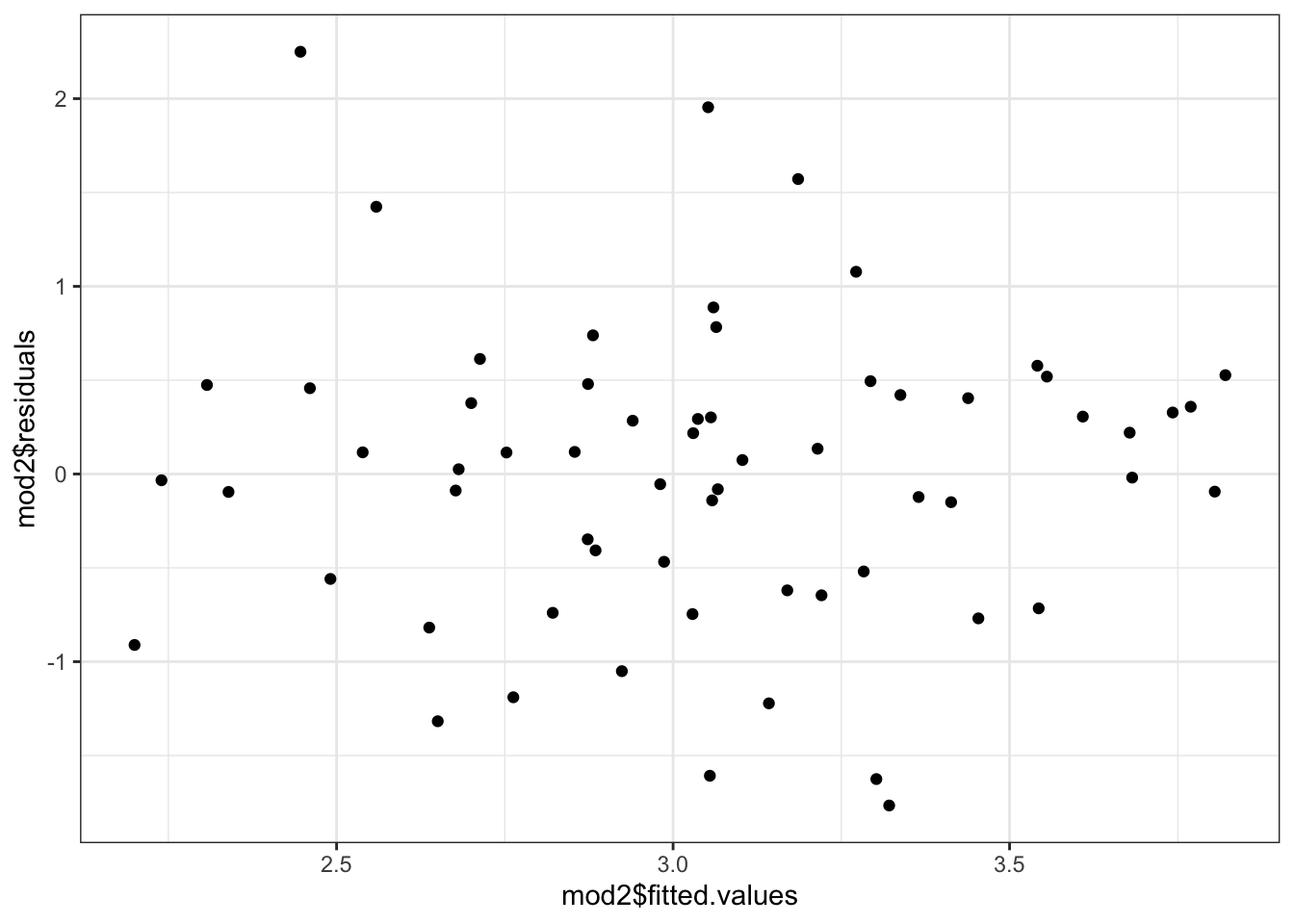

## Chisquare = 1.291396, Df = 1, p = 0.25579Here, we see not much evidence of heteroskedasticity, but it is probably worth looking at the residuals vs fitted plot anyway.

ggplot(mapping=aes(x=mod2$fitted.values,

y=mod2$residuals)) +

geom_point() +

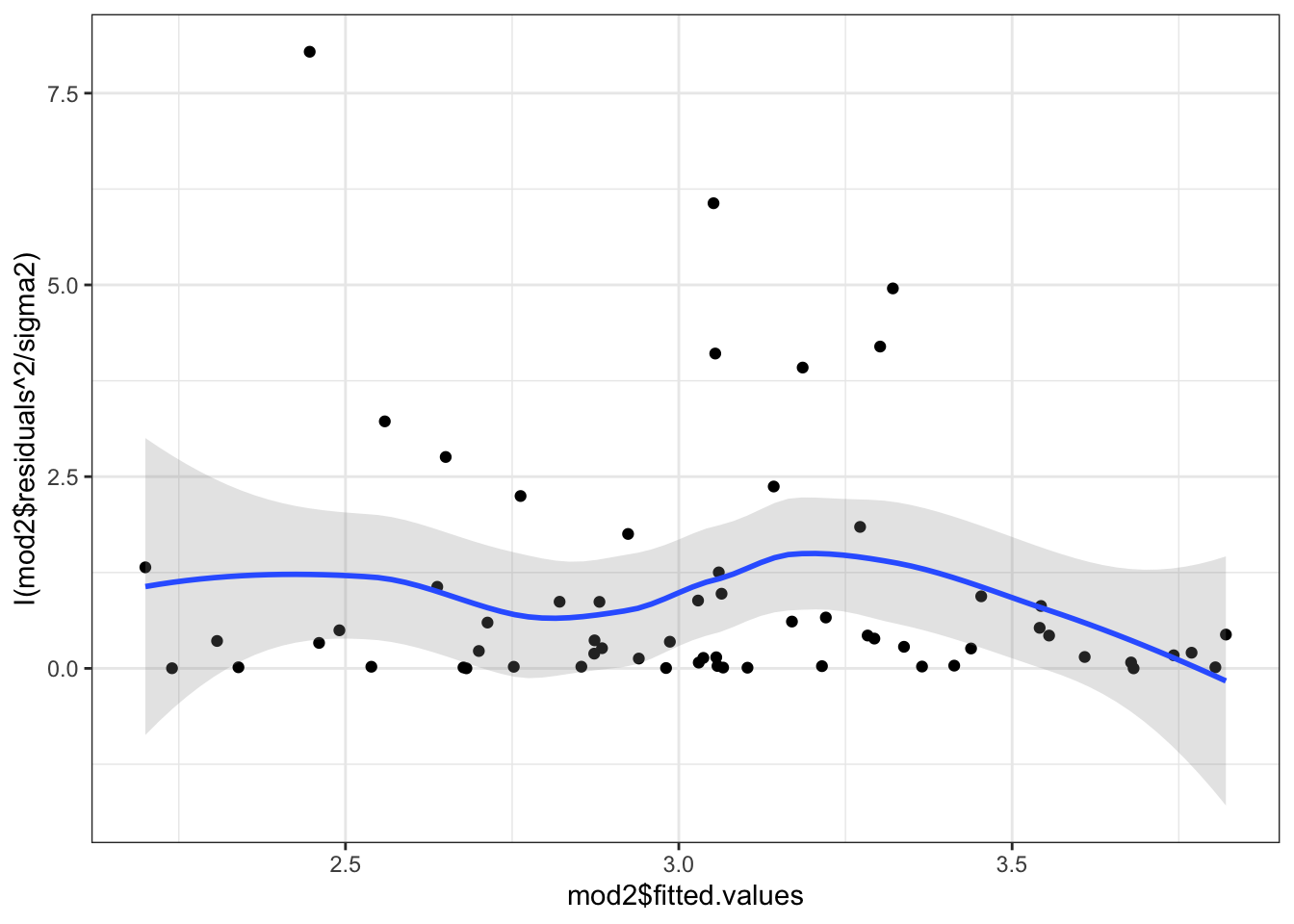

theme_bw() Or, we could actually plot out the standardized squared residuals against the fitted values.

Or, we could actually plot out the standardized squared residuals against the fitted values.

sigma2 <- sum(mod2$residuals^2)/nobs(mod2)

ggplot(mapping=aes(x=mod2$fitted.values,

y=I(mod2$residuals^2/sigma2))) +

geom_point() +

geom_smooth(se=TRUE, alpha=.25) +

theme_bw()

In this case, even though the test was not significant, we would probably want to “fix” the problem just the same. In fact, the test is known to have relatively low power in small samples. The advice then is to “fix” the problem if there is any hint of heteroskedasticity even if the test isn’t conclusive.

One of the most common fixes is to use robust standard errors. Robust standard errors can be calculated to compensate for an unknown pattern of non-constant error variance. They do not change the OLS coefficient estimates or solve the inefficiency problem, but do give more accurate \(p\)-values in the presence of the problem. These come in lots of different “flavors,” most of which are variants on the method originally proposed by White (1980).

The covariance matrix of the OLS estimator is: \begin{eqnarray*}

\[\begin{aligned} V(\mathbf{b}) &= \mathbf{(X^{\prime}X)^{-1}X^{\prime}\Sigma X(X^{\prime}X)^{-1}}\\ &= \mathbf{(X^{\prime}X)^{-1}X^{\prime}}V(\mathbf{y})\mathbf{ X(X^{\prime}X)^{-1}} \end{aligned}\]

Where \(V(\mathbf{y}) = \sigma_{\varepsilon}^{2}\mathbf{I}_{n}\) if the assumption of homoskedasticity is satisfied. The variance simplifies to:

\[V(\mathbf{b}) = \sigma_{\varepsilon}^{2}(\mathbf{X^{\prime}X})^{-1}\]

In the presence of non-constant error variance, however, \(V(\mathbf{y})\) contains nonzero covariance and unequal variance. In these cases, White suggests a consistent estimator of the variance that constrains \(\mathbf{\Sigma}\) to a diagonal matrix containing only squared residuals. The heteroskedasticity consistent covariance matrix (HCCM) estimator is then:

\[V(\mathbf{b}) = \mathbf{(X^{\prime}X)^{-1}X^{\prime}\hat{\Phi}X (X^{\prime}X)^{-1}}\]

where \(\mathbf{\hat{\Phi}} = e^{2}_{i}\mathbf{I}_{n}\) and the \(e_{i}\) are the OLS residuals

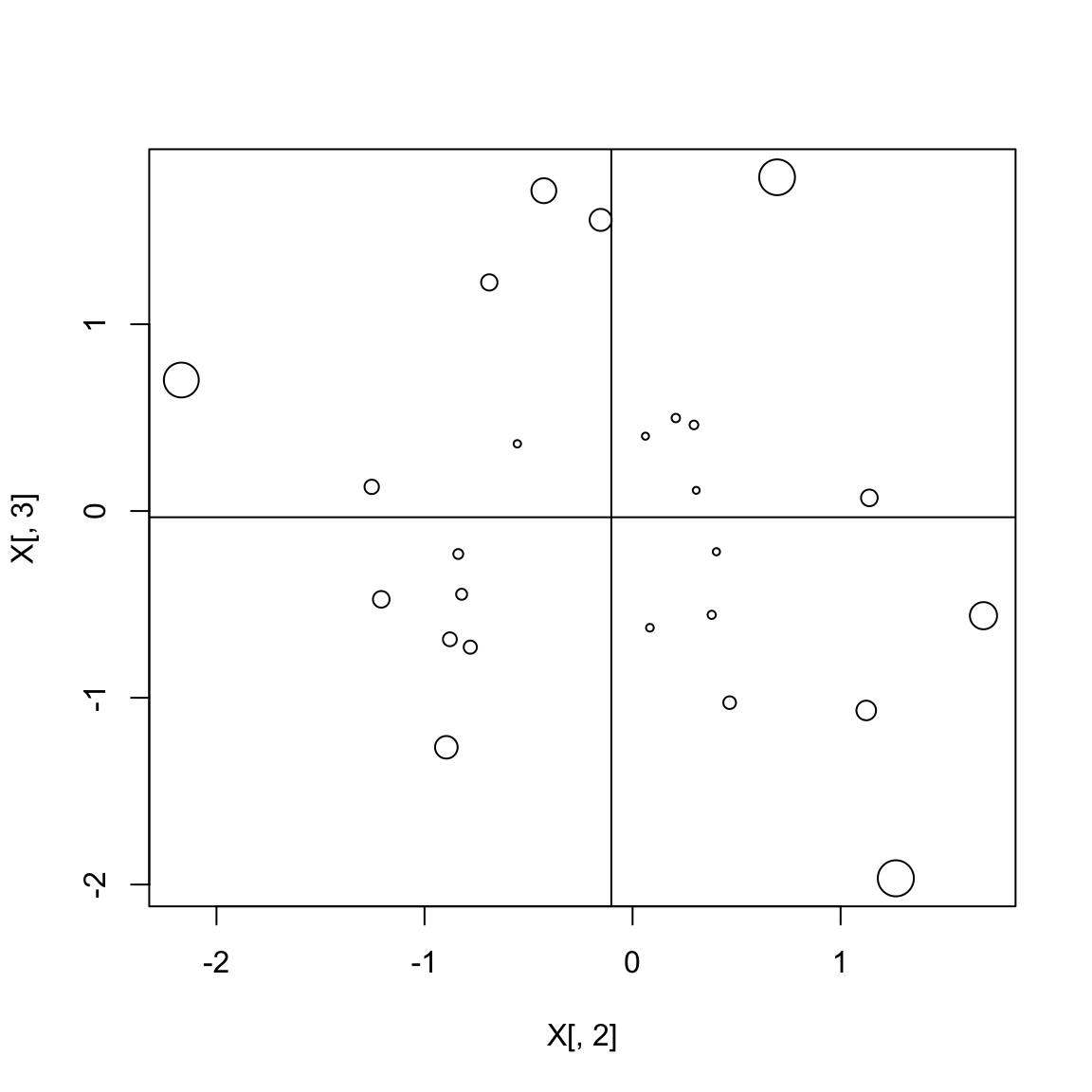

These are known as HC0 robust standdard errors. Other HCCMs use the “hat value” which are the diagonal elements of \(\mathbf{X}\left(\mathbf{X}^{\prime}\mathbf{X}\right)^{-1}\mathbf{X}^{\prime}\)

These give a sense of how far each observation is from the mean of the X’s. Below is a figure that shows two hypothetical \(X\) variables and the plotting symbols are proportional in size to the hat value

set.seed(123)

X <- cbind(1, MASS::mvrnorm(25, c(0,0), diag(2)))

h <- diag(X%*% solve(t(X)%*%X)%*%t(X))

plot(X[,2], X[,3], cex = h*10)

abline(h=mean(X[,3]), v=mean(X[,2]))

MacKinnon and White (1985) considered three alternatives: HC1, HC2 and HC3, each of which offers a different method for finding \(\mathbf{\Phi}\).

- HC1: \(\frac{N}{N-K}\times\text{HC0}\).

- HC2: \(\hat{\mathbf{\Phi}} = \text{diag}\left[\frac{e_{i}^{2}}{1-h_{ii}}\right]\) where \(h_{ii} = \mathbf{x}_{i}(\mathbf{X}^{\prime}\mathbf{X})^{-1}\mathbf{x}_{i}^{\prime}\)

- HC3: \(\hat{\mathbf{\Phi}} = \text{diag}\left[\frac{e_{i}^{2}}{(1-h_{ii})^{2}}\right]\)

HC3 standard errors are shown to outperform the alternatives in small samples, but can still fail to generate the appropriate Type I error rate when outliers are present. HC4 standard errors can produce the appropriate test statistics even in the presence of outliers:

\[\hat{\mathbf{\Phi}} = \text{diag}\left[\frac{e_{i}^{2}}{(1-h_{ii})^{\delta_{i}}}\right]\]

where \(\delta_{i} = min\left\{4, \frac{N h_{ii}}{p}\right\}\) with \(n\) = number of obs, and \(p\) = number of parameters in model.

HC4 outperform HC3 in the presence of influential observations, but not in other situations. HC4 standard errors are not universally better than others and as Cribari-Neto and da Silva (2011) show, HC4 SEs have relatively poor performance when there are many regressors and when the maximal leverage point is extreme. Cribari-Neto and da Silva propose a modified HC4 estimator, called HC4m, where, as above

\[\hat{\mathbf{\Phi}} = \text{diag}\left[\frac{e_{i}^{2}}{(1-h_{ii})^{\delta_{i}}}\right]\]

and here, \(\delta_{i} = min\left\{\gamma_{1}, \frac{nh_{ii}}{p}\right\} + min\left\{\gamma_{2}, \frac{nh_{ii}}{p}\right\}\)

They find that the best values of the \(\gamma\) parameters are \(\gamma_{1}=1\) and \(\gamma_{2}=1.5\). HC5 standard errors are supposed to also provide different discounting than HC4 and HC4m estimators. The HC5 standard errors are operationalized as:

\[\hat{\mathbf{\Phi}} = \text{diag}\left[\frac{e_{i}^{2}}{(1-h_{ii})^{\delta_{i}}}\right]\]

and here, \(\delta_{i} = min\left\{\frac{nh_{ii}}{p}, max\left\{4, \frac{nkh_{max}}{p}\right\}\right\}\) with \(k=0.7\).

For observations with bigger hat-values, their residuals get increased in size, thus increasing the standard error (generally).

The coeftest() function in the lmtest package allows you to specify any of these HCCMs.

library(lmtest)

library(sandwich)

coeftest(mod2, vcov. = vcovHC, type="HC5")##

## t test of coefficients:

##

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -13.51258 6.65537 -2.0303 0.04692 *

## murUP -0.21211 0.29742 -0.7132 0.47860

## murMetro -0.53818 0.28915 -1.8612 0.06778 .

## rep_majYes -0.44041 0.19428 -2.2669 0.02714 *

## over60_pop 26.93005 10.55143 2.5523 0.01336 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1You Try It!

Now, re-estimate the model with the appropriate functional forms. - Test the model for heteroskedasticity problems. - Use robust standard errors to test model coefficients. - How do results change as you change the type of HCCM?

Notice that with the HC5 standard errors, the polynomial in over60_pop is significant. We could use this to provide information to the ggpredict() and stargazer() as well.

g <- ggpredict(mod2b,

terms="over60_pop [all]",

vcov.fun="vcovHC",

vcov.type="HC5")

ggplot(g, aes(x=x, y=predicted)) +

geom_ribbon(aes(ymin=conf.low, ymax=conf.high),

alpha=.25) +

geom_line() +

theme_bw() +

labs(x="Proportion of Population Over 60",

y="Predicted COVID-19 Cases/10k")

We could also provide these to the stargazer() function.

library(stargazer)

ct <- coeftest(mod2b, vcov.=vcovHC(mod2b, type="HC5"))

stargazer(mod2b, type="html",

style="ajps",

covariate.labels=c(

"Urban Area",

"Metro Area",

"Republican Majority (0/1)",

"Over 60 Population",

"Over 60 Population Squared",

"Over 60 Population Cubed",

"Intercept"),

se = list(ct[,2]),

t = list(ct[,3]),

p = list(ct[,4]),

star.cutoffs=.05,

star.char = "`*`",

notes="`*` p < .05",

notes.append=FALSE)| log(cases_pc) | |

| Urban Area | -0.124 |

| (0.298) | |

| Metro Area |

-0.592*

|

| (0.259) | |

| Republican Majority (0/1) | -0.343 |

| (0.181) | |

| Over 60 Population |

2.891*

|

| (1.019) | |

| Over 60 Population Squared | 1.535 |

| (0.773) | |

| Over 60 Population Cubed |

1.321*

|

| (0.647) | |

| Intercept |

3.483*

|

| (0.228) | |

| N | 63 |

| R-squared | 0.280 |

| Adj. R-squared | 0.202 |

| Residual Std. Error | 0.801 (df = 56) |

| F Statistic |

3.623* (df = 6; 56)

|

* p < .05

|

|

There are some other options, like variance modeling (e.g., heteroskedastic regression), weighted least squares (WLS) or feasible generalized least squares (FGLS), too. The latter two you would do by specifying a weight= argument to the linear model where the weight is the variable that is proportional to the scale of the residuals. The former requires a maximum likelihood estimator that allows you to simultaneously parameterize the mean and variance (though this is done easily with the gamlss package).

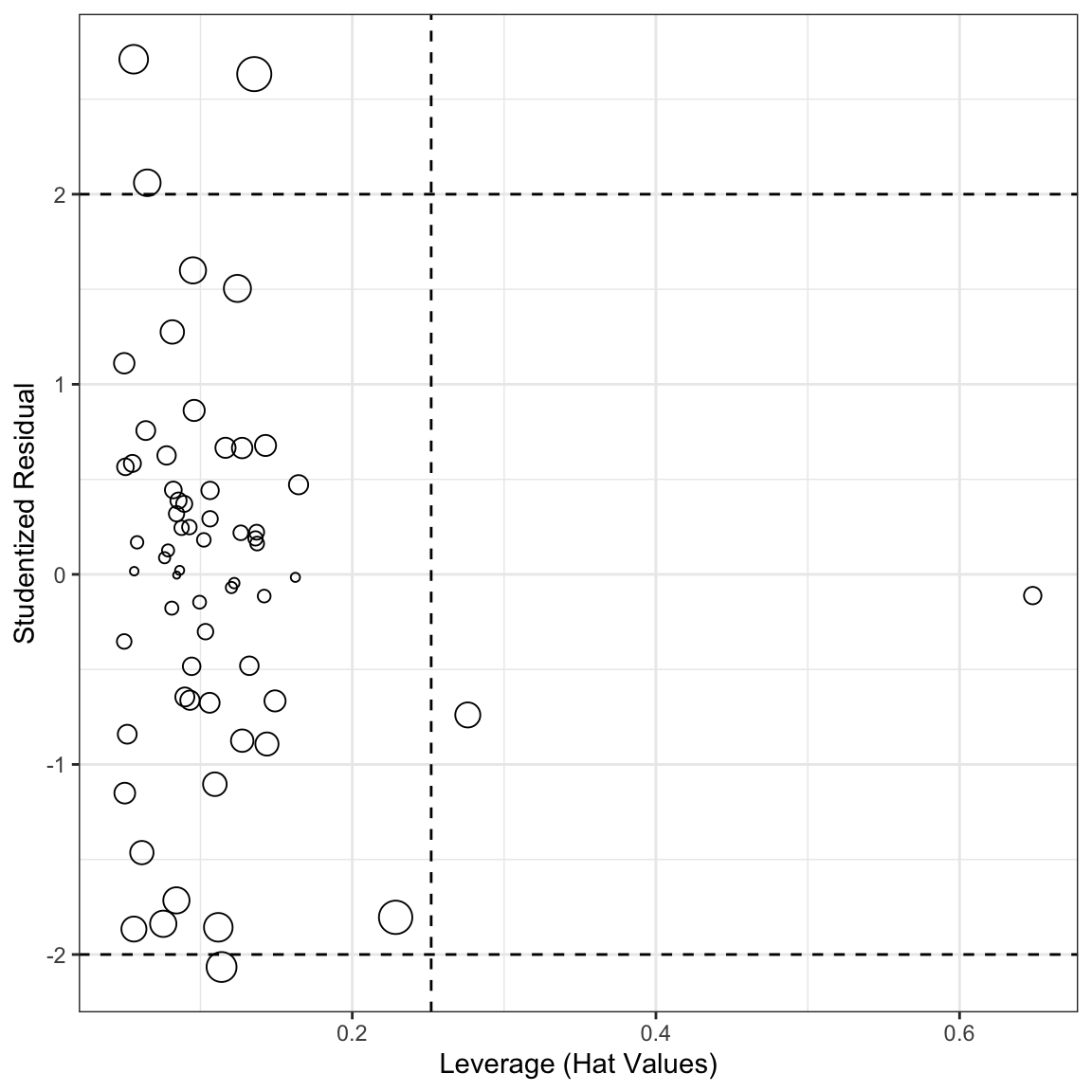

3.4 Diagnostics: Outliers and Influential Data

There are lots of diagnostics for outliers and influential data as well. First, it is worth noting that influential points are those that have both leverage and discrepancy. Points with high leverage are those that are far away from the center of the distribution of the \(X\) variables. Points with discrepancy are those with large residuals.

The most common measure of leverage is the \(hat-value\), \(h_i\). The name \(hat-values\) results from their calculation based on the fitted values (\(\hat{Y}\)):

\[\begin{aligned} \hat{Y}_{j} &= h_{1j}Y_{1} + h_{2j}Y_{2} + \cdots + h_{nj}Y_n\\ &= \sum_{i=1}^{n}h_{ij}Y_{i} \end{aligned}\]

Recall that the Hat Matrix, \(\mathbf{H}\), projects the \(Y\)’s onto their predicted values:

\[\begin{aligned} \mathbf{\hat{y}} &= \mathbf{Xb}\\ &= \mathbf{X(X^{\prime}X)^{-1}X^{\prime}y}\\ &= \mathbf{Hy}\\ \underset{(n\times n)}{\mathbf{H}} &= \mathbf{X(X^{\prime}X)^{-1}X^{\prime}} \end{aligned}\]

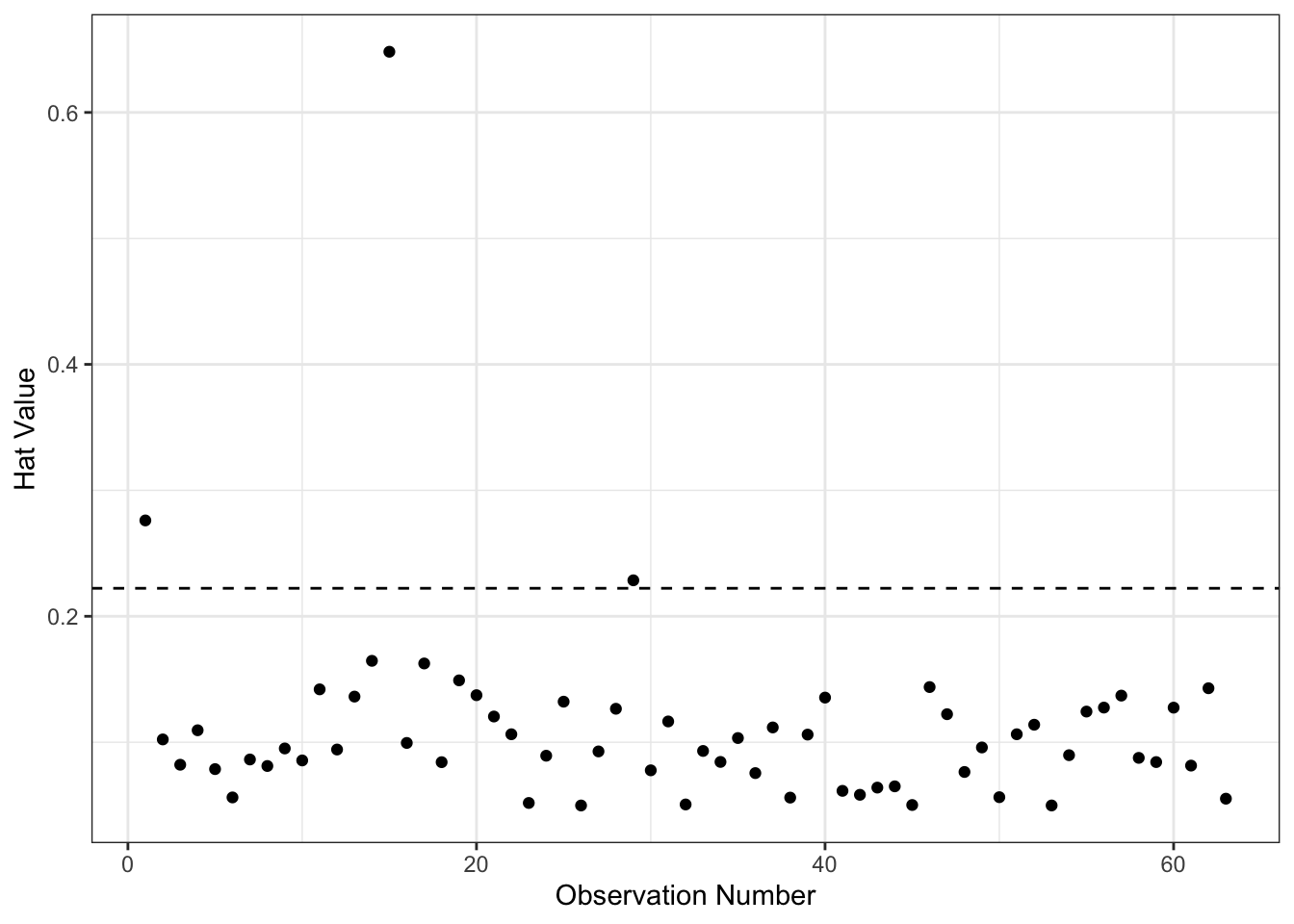

If \(h_{ij}\) is large, the \(i^{th}\) observation has a substantial impact on the \(j^{th}\) fitted value. Since \(\bm{H}\) is symmetric and idempotent, the hat value \(h_{i}\) measures the potential leverage of \(Y_{i}\) on all the fitted values. In multiple regression, \(h_i\) measures the distance from the centroid point of all of the \(X\)’s (point of means). Hat values range from \(\frac{1}{n}\) to 1 with a mean of \(\frac{k+1}{n}\). Values more than twice the mean are considered “big,” though this is not a formal test.

We could make a plot of the hat values.

hbar <- mod2b$rank/nobs(mod2b)

ggplot(mapping=aes(x=1:nobs(mod2b), y=hatvalues(mod2b))) +

geom_point() +

geom_hline(yintercept=2*hbar, lty=2) +

theme_bw() +

labs(x="Observation Number",

y="Hat Value") As you can see above, there are a few points with higher than expected hat values. We could find them as follows:

As you can see above, there are a few points with higher than expected hat values. We could find them as follows:

aug2b <- augment(mod2b) %>%

mutate(obs = 1:nobs(mod2b))

aug2b %>% filter(.hat > 2*hbar) %>%

select(`log(cases_pc)`, mur, rep_maj, .hat, obs)## # A tibble: 3 x 5

## `log(cases_pc)` mur rep_maj .hat obs

## <dbl> <fct> <fct> <dbl> <int>

## 1 3.91 Metro No 0.276 1

## 2 2.21 Rural Yes 0.648 15

## 3 1.29 UP Yes 0.229 29So, observations 1, 15 and 29 are the ones with high leverage. Now remember, they are not necessarily influential because we don’t know whether they are also models with big residuals.

Unusual observations typically have large residuals but not necessarily so - high leverage observations can have small residuals because they pull the line towards them:

\[E_{i}) = \sigma^{2}_{\varepsilon}(1-h_{i})\]

Standardized residuals provide one possible, though unsatisfactory, way of detecting outliers: \[E_{i}^{\prime} = \frac{E_{i}}{S_{E}\sqrt{1-h_{i}}}\]

The numerator and denominator are not independent and thus \(E_{i}^{\prime}\) does not follow a \(t\)-distribution: If \(\mid E_{i} \mid\) is large, the standard error is also large:

\[S_{E} = \sqrt{\frac{\sum E_{i}^{2}}{n-k-1}}\]

However, if we refit the model deleting the \(i^{th}\) observation we obtain an estimate of the standard deviation of the residuals \(S_{E(-i)}\) (standard error of the regression) that is based on the \(n-1\) observations. We then calculate the studentized residuals \(E_{i}^{*}\)’s, which have an independent numerator and denominator:

\[E_{i}^{*} = \frac{E_{i}}{S_{E(-i)}\sqrt{1-h_{i}}}\]

Studentized residuals follow a \(t\)-distribution with \(n-k-2\) degrees of freedom. Observations that have a studentized residual outside the \(\pm 2\) range are considered statistically significant at the 95% level. Since we are looking for the furthest outliers, it is not legitimate to use a simple \(t\)-test. We would expect that \(5\%\) of the studentized residuals would be beyond \(t_{.025}\pm2\) by chance alone. To remedy this we can make a Bonferroni adjustment to the \(p\)-value. The Bonferroni \(p\)-value for the largest outlier is: \(p=2np^{\prime}\) where \(p^{\prime}\) is the unadjusted \(p\)-value from a \(t\)-test with \(n-k-2\) degrees of freedom. The outlierTest() function in the car package gives Bonferroni \(p\)-value for the largest absolute studentized residual

outlierTest(mod2b)## No Studentized residuals with Bonferroni p < 0.05

## Largest |rstudent|:

## rstudent unadjusted p-value Bonferroni p

## 38 2.881524 0.0056334 0.35491Note from the above that the adjusted \(p\)-value suggests that we do not have significant outliers.

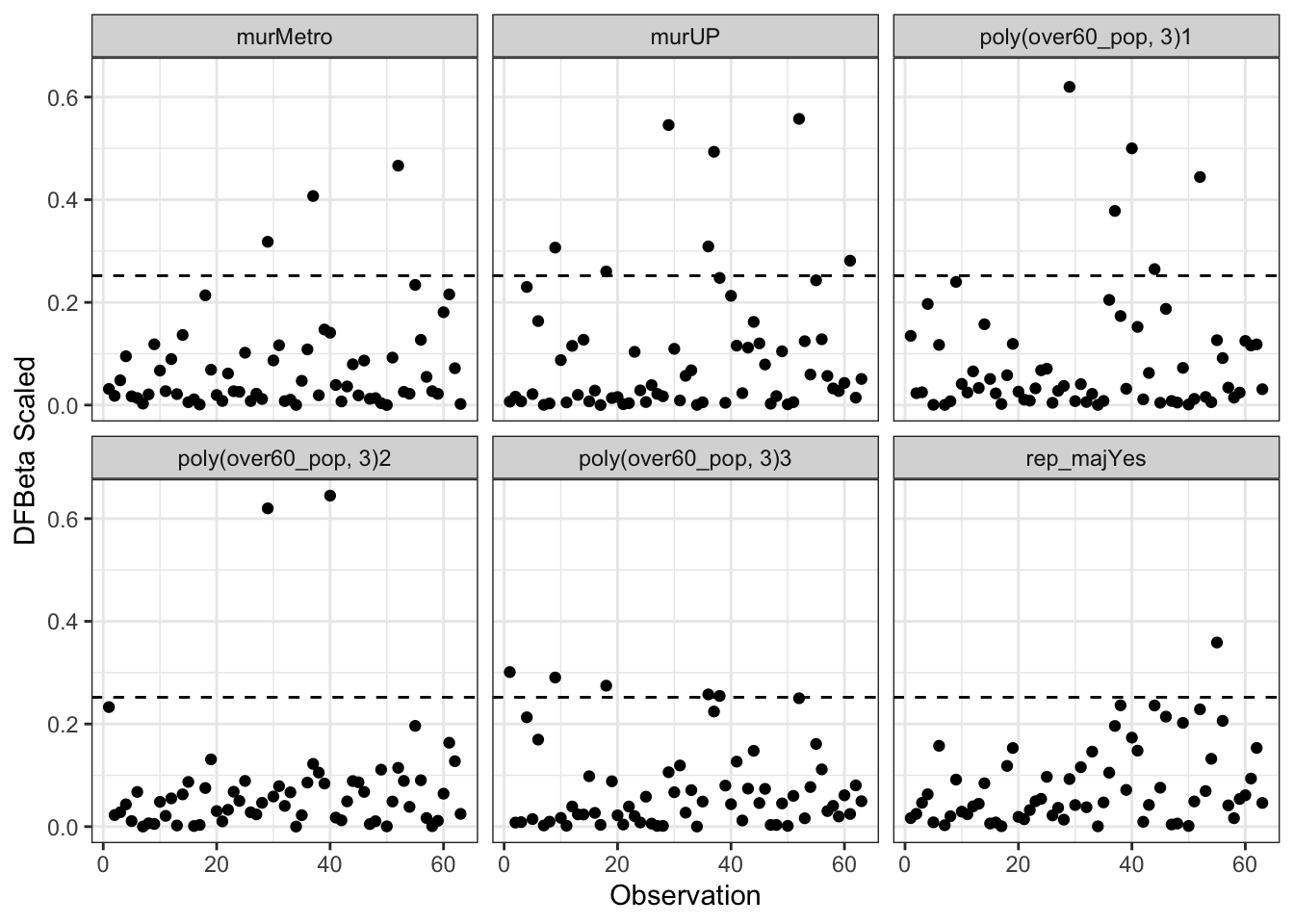

Recall that influential observations are those that have both discrepancy and leverage. There are a few different ways of measuring this. One common way is with DFBeta - a difference in the coefficient induced by removing a single observation.

\[D_{ij} = B_{j} - B_{j(-i)}\quad \forall \quad i=1, \ldots, n; \quad j=1, \ldots, k\]

The \(B_{j}\) are the coefficients for all the data and the \(B_{j(-i)}\) are the coefficients for the same model with the \(i^{th}\) observation removed. A standard cut-off for an influential observation is: \(D_{ij} \geq \frac{2}{\sqrt{n}}\).

The dfbeta() function calculates these values for us. There is a scaled version that permits more reasonable comparison:

\[D_{ij}^{(s)} = \frac{B_j - B_{j(-i)}}{s_{E(-i)}\sqrt{(\mathbf{X}^{\prime}\mathbf{X})_{jj}}}\]

The main problem with the \(D_{ij}\) and \(D_{ij}^{(s)}\) is that it produces a value for every observation and coefficient sometimes requiring lots of investigation. We could plot them in R with:

Ds <- dfbetas(mod2b)Before we plot them, we’ll arrange them in log format with the pivot_longer() function from the tidyr package. In the plot below, we should be looking for values bigger than \(\frac{2}{\sqrt{n}}\). We can see below that several of the coefficients appear to be affected by some influential observations.

library(tidyr)

Ds <- Ds %>%

as.data.frame %>%

select(-1) %>%

mutate(obs = 1:nobs(mod2b)) %>%

pivot_longer(cols = -obs,

names_to = "var",

values_to="value")

ggplot(Ds, aes(x=obs, y=abs(value))) +

geom_point() +

geom_hline(yintercept=2/sqrt(nobs(mod2b)),

lty=2) +

facet_wrap(~var) +

theme_bw() +

labs(x="Observation",

y="DFBeta Scaled")

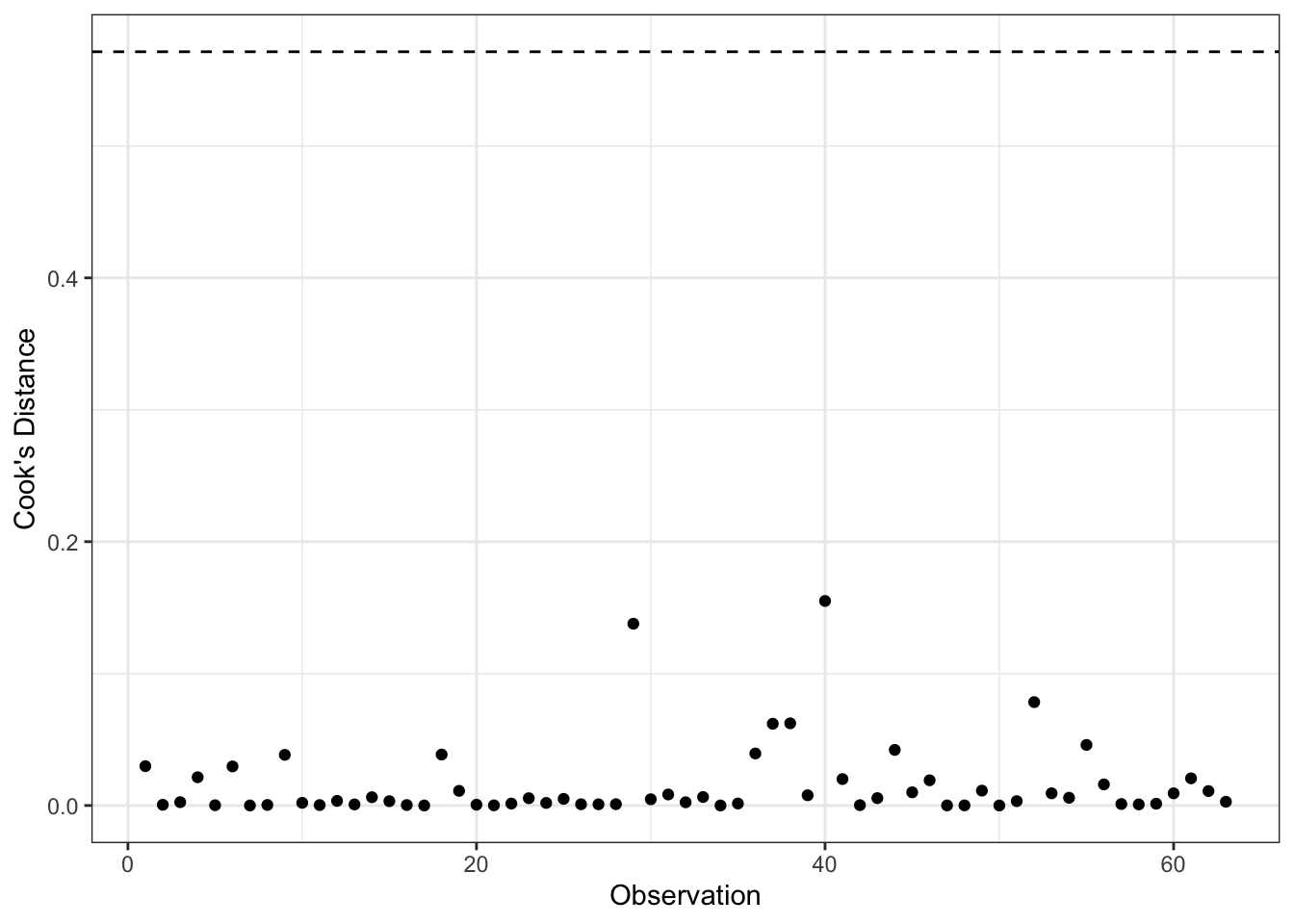

Cook’s D measures the distance between \(B_j\) and \(B_{j(-i)}\) by calculating an \(F\)-statistic for the hypothesis that \(B_j=B_{j(-i)}\), for \(j=0,1,\ldots,k\). An \(F\)-test is calculated for each observation as follows:

\[D_{i} = \frac{E_{i}^{\prime 2}}{k+1} \times \frac{h_{i}}{1-h_{i}}\]

where \(h_{i}\) is the hat value for each observation and \(E_{i}^{\prime}\) is the standardized residual. The first fraction measures discrepancy; the second fraction measures leverage. There is no significance test for \(D_i\) (i.e., the \(F\)-statistic here measures only distance) but a commonly used cut-off is: \(D_{i} > \frac{4}{n-k-1}\)

ggplot(aug2b, aes(x=obs, y=.cooksd)) +

geom_point() +

geom_hline(yintercept=4/mod2b$rank,

lty=2) +

theme_bw() +

labs(x="Observation",

y="Cook's Distance")

We could plot many of these diagnostics together in a “bubble plot.”

ggplot(aug2b, aes(x=.hat, y=.std.resid, size=sqrt(.cooksd))) +

geom_point(show.legend = FALSE, shape=1) +

geom_hline(yintercept=c(-2,2), lty=2) +

geom_vline(xintercept=2/sqrt(nobs(mod2)), lty=2) +

theme_bw() +

labs(x="Leverage (Hat Values)",

y="Studentized Residual")

Finally, we could evaluate the potential for joint influence. Subsets of cases can jointly influence a regression line, or can offset the influence of other points. Cook’s D can help us determine joint influence if there are relatively few influential cases. That is, we can delete cases sequentially, updating the model each time and exploring the Cook’s D’s again. This approach is impractical if there are potentially a large number of subsets to explore.

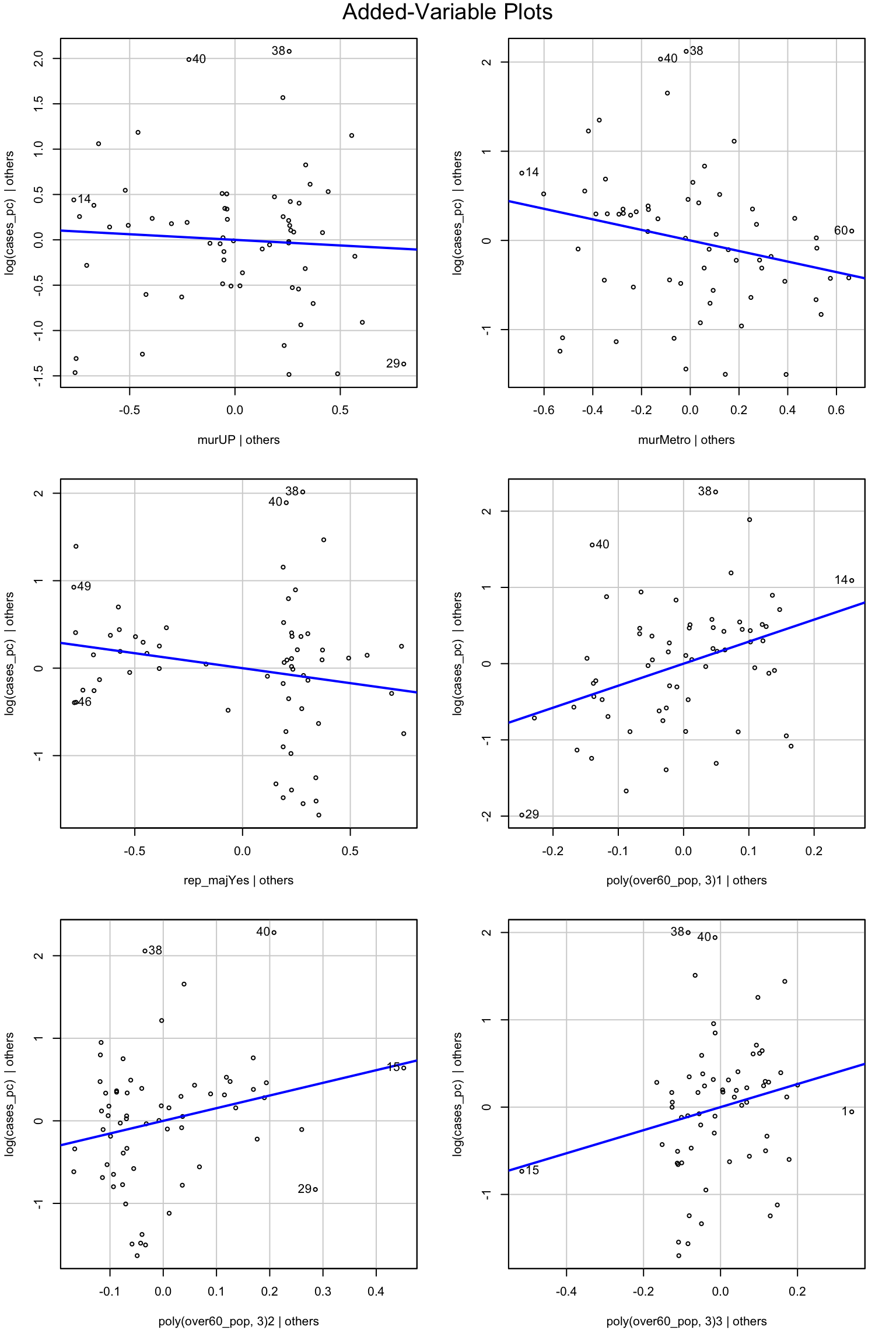

Added-variable plots (also called partial-regression plots) provide a more useful method of assessing joint influence. These plots essentially show the partial relationships between \(Y\) and each \(X\). Let \(Y_{i}^{(1)}\) represent the residuals from the least-squares regression of \(Y\) on all of the \(X\)’s except for \(X_1\):

\[Y_{i} = A^{(1)} + B_{2}^{(1)}X_{i2} + \cdots + B_{k}^{(1)}X_{ik} + Y_{i}^{(1)}\]

Similarly, \(X_{i}^{(1)}\) are the residuals from the regression of \(X_{1}\) on all the other \(X\)’s

\[X_{i1} = C^{(1)} + D_{2}^{(1)}X_{i2} + \cdots + D_{k}^{(1)}X_{ik} + X_{i}^{(1)}\]

These two equations determine the residuals \(X^{(1)}\) and \(Y^{(1)}\) as parts of \(X_{1}\) and \(Y\) that remain when the effects of \(X_{2}, \ldots, X_{k}\) are removed. The Residuals \(Y^{(1)}\) and \(X^{(1)}\) have the following properties:

- Slope of the regression of \(Y^{(1)}\) on \(X^{(1)}\) is the least-squares slope \(B_1\) from the full multiple regression

- Residuals from the regression of \(Y^{(1)}\) on \(X^{(1)}\) are the same as the residuals from the full regression: \(Y_{i}^{(1)} = B_{1}X_{i1}^{(1)} + E_{i}\)

- Variation of \(X^{(1)}\) is the conditional variance of \(X_1\) holding the other \(X\)’s constant. Consequently, except for the df the standard error from the partial simple regression is the same as the multiple regression SE of \(B_1\).

We can make these in R with avPlots()

avPlots(mod2b)

The only thing that really stands out as a potential problem is that for the poly(over60_pop, 3)1|others figure, observations 14 and 29 are both working to increase the regression slope. Observation 15 looks quite influential in the other to polynomial terms.

You Try It!

Use the methods discussed above to evaluate potential outliers and influential data in the model you’ve estimated.

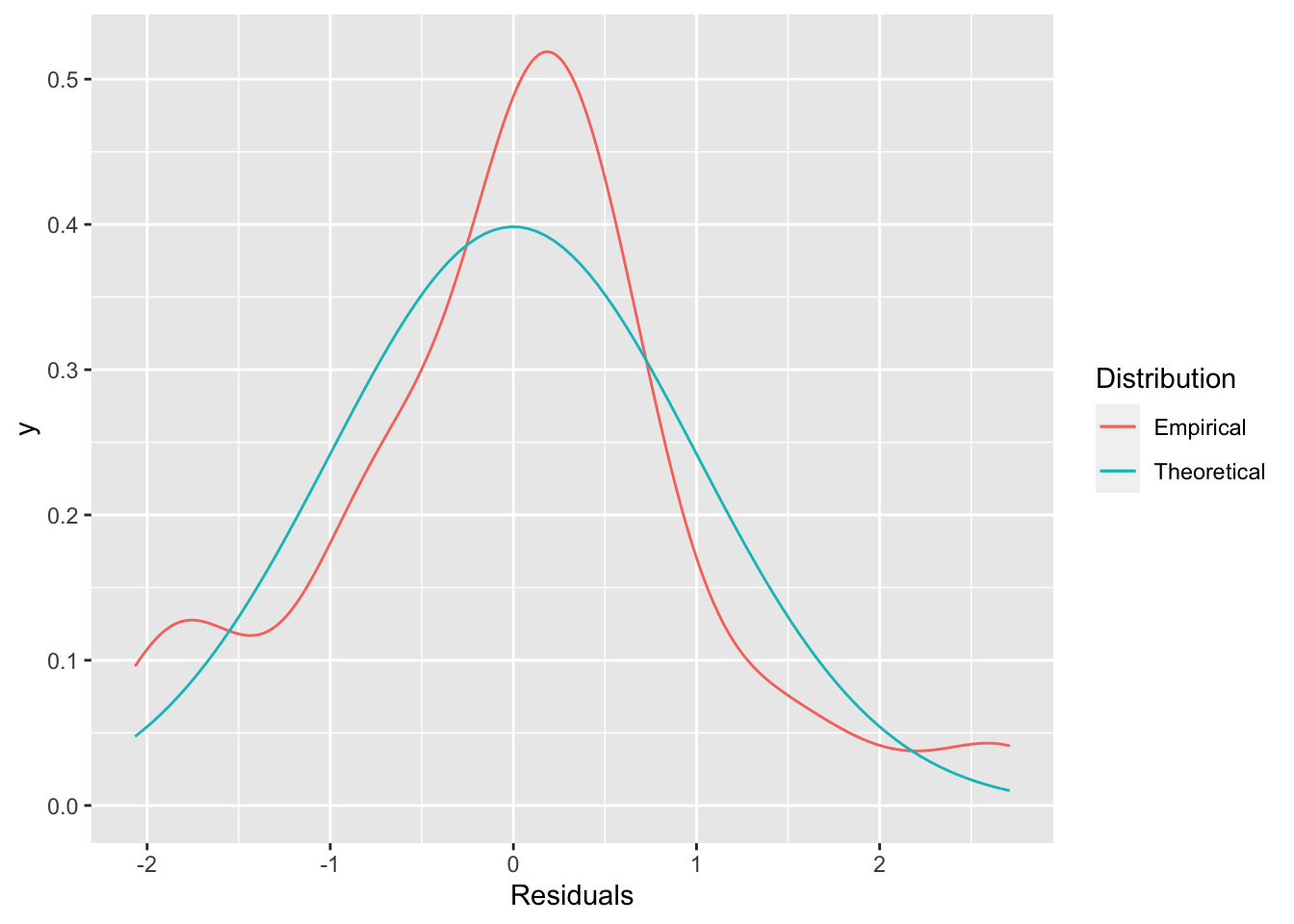

3.5 Diagnostics for Normality

Diagnostics for normality are slightly less plentiful, but there are a couple of options. First, we could simply look at the distribution of the residuals and compare that to a normal distribution.

ggplot(aug2b, aes(x=.std.resid)) +

stat_density(geom="line", aes(color="Empirical")) +

stat_function(aes(color="Theoretical"),

fun=dnorm,

args=list(sd = sd(aug2b$.std.resid)),

geom="line") +

labs(x="Residuals",

color = "Distribution")

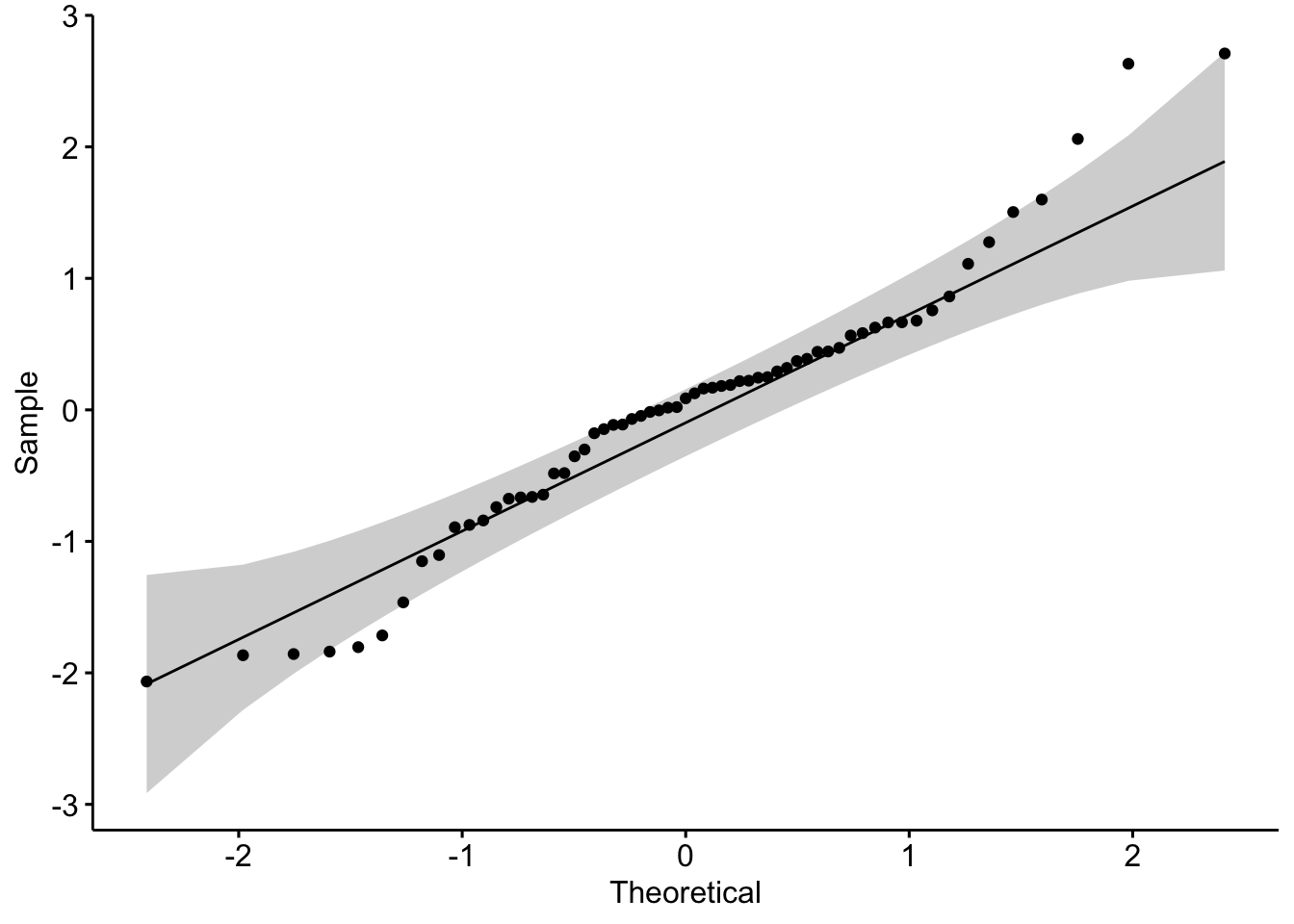

The distribution above doesn’t look all that normal. If we wanted a sense of how not normal it looks, we could look at a quantile-quantile plot. We’ll use the one in the ggpubr package.

library(ggpubr)

ggqqplot(aug2b$.std.resid) We see that there are some points that fall outside of the confidence bounds. That’s not ideal for us. We could also do a formal test. The Shapiro-Wilk’s test is a good option here.

We see that there are some points that fall outside of the confidence bounds. That’s not ideal for us. We could also do a formal test. The Shapiro-Wilk’s test is a good option here.

shapiro.test(aug2b$.std.resid)##

## Shapiro-Wilk normality test

##

## data: aug2b$.std.resid

## W = 0.96143, p-value = 0.04601The null hypothesis here is normality, so we reject the null hypothesis. This is all the more interesting because these tests are known to have low power in small samples, so that fact that we reject the null with 63 observations is quite remarkable.

You Try It!

Are the residuals from your model normally distributed?

You’ll note that when we started, we were using the log of cases. It might be that there is a better normalizing transformation than the log. We could consider that with the powerTransform() function

summary(powerTransform(mod2b, family="bcPower"))## bcPower Transformation to Normality

## Est Power Rounded Pwr Wald Lwr Bnd Wald Upr Bnd

## Y1 1.0429 1 0.342 1.7437

##

## Likelihood ratio test that transformation parameter is equal to 0

## (log transformation)

## LRT df pval

## LR test, lambda = (0) 8.768555 1 0.0030647

##

## Likelihood ratio test that no transformation is needed

## LRT df pval

## LR test, lambda = (1) 0.01438813 1 0.90452This suggests that no transformation is needed in addition to the log.

3.5.1 Bootstrapping Regression Models

One way to deal with non-normality (if non-normality of the errors is the only problem) is to use the bootstrap. The “wild bootstrap” is known to handle problems with heteroskedasticity appropriately. We can use the wild.boot() function in the lmboot package to accomplish this.

library(lmboot)

w <- wild.boot(formula(mod2b), B = 2500, data=colo_dat)We could calculate percentile confidence intervals for the parameters in the model by summarising the bootEstParam element of the w object.

library(tibble)

bs.ci <- w$bootEstParam %>%

as.data.frame %>%

summarise(across(everything(), ~ quantile(.x, probs=c(.025,.975)))) %>%

t() %>%

as_tibble(., .name_repair="minimal")

names(bs.ci) <- c("lower", "upper")

bs.ci <- bs.ci %>%

mutate(est = c(w$origEstParam),

param = names(coef(mod2b)))For comparison, we could make the original and HC5 confidence intervals, too:

orig.ci <- as_tibble(confint(mod2b),

.name_repair="minimal")

names(orig.ci) <- c("lower", "upper")

orig.ci <- orig.ci %>%

mutate(est = coef(mod2b),

param = names(coef(mod2b)))

hc5t <- coeftest(mod2b, vcov.=vcovHC,

type="HC5")

hc5.ci <- as_tibble(confint(hc5t),

.name_repair="minimal")

names(hc5.ci) <- c("lower", "upper")

hc5.ci <- hc5.ci %>%

mutate(est = coef(mod2b),

param = names(coef(mod2b)))Now, we could just look at them, but it’s probably more interesting to make a graph. To do that, we’ll have to combine everything together after making a flag in each dataset for which model the confidence intervals come from.

all.ci <- bind_rows(orig.ci, hc5.ci, bs.ci)

all.ci <- all.ci %>%

mutate(type=factor(rep(1:3, each=7),

labels=c("Raw", "HC5", "BS")))Now, we could make the graph

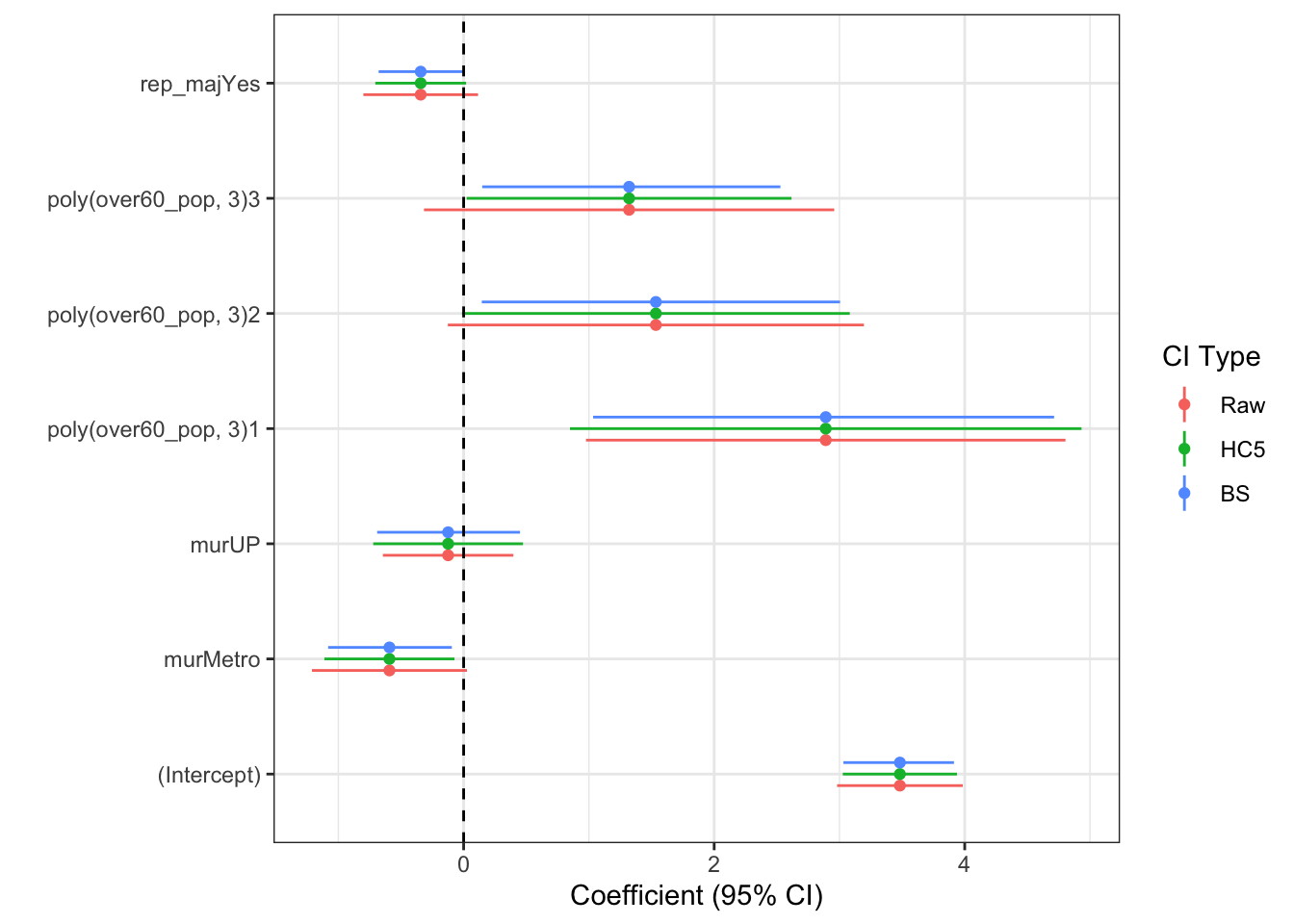

ggplot(all.ci, aes(y=est, x=param, colour=type)) +

geom_point(position = position_dodge(width=.3)) +

geom_linerange(aes(ymin=lower, ymax=upper),

position= position_dodge(width=.3)) +

geom_hline(yintercept=0, lty=2) +

theme_bw() +

labs(x = "",

y="Coefficient (95% CI)",

colour = "CI Type") +

coord_flip()

You Try It!

What do you find if you bootstrap your model instead of using robust standard errors?

3.6 Interactions

We have addressed most of the main issues covered in basic linear model discussions, but we have yet to discuss interactions. Let’s start by talking about interactions between categorical variables and continuous variables.

Below, we’re going to create a new variable that is a Hispanic dummy variable identifying counties with Hispanic populations greater than \(15\%\). Then, we’ll add an interaction between over60_pop and hisp_dum. In R, we do this simply by putting an asterisk between the two terms:

colo_dat <- colo_dat %>%

mutate(hisp_dum = factor(hisp_pop > .15,

levels=c(TRUE, FALSE),

labels=c("High", "Low")))

mod3 <- lm(log(cases_pc) ~ hisp_dum*over60_pop + rep_maj, data=colo_dat)

summary(mod3)##

## Call:

## lm(formula = log(cases_pc) ~ hisp_dum * over60_pop + rep_maj,

## data = colo_dat)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1.71197 -0.48239 0.08658 0.53275 1.94316

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -19.037 7.401 -2.572 0.01269 *

## hisp_dumLow 24.683 10.155 2.431 0.01819 *

## over60_pop 35.512 11.559 3.072 0.00323 **

## rep_majYes -0.512 0.225 -2.275 0.02661 *

## hisp_dumLow:over60_pop -39.423 16.009 -2.463 0.01679 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.7923 on 58 degrees of freedom

## Multiple R-squared: 0.2707, Adjusted R-squared: 0.2204

## F-statistic: 5.381 on 4 and 58 DF, p-value: 0.0009371From the regression output, we see that the interaction of the dummy and continuous variables is significant. If the categorical variable had had more than two levels, we would have needed to look at the Anova() output to see whether the interaction was significant. This means that the effect of over60_pop is significantly different in high Hispanic population counties versus low Hispanic population counties (as defined above). The converse is also true - the effect of being a Hispanic county changes as a function of the population over 60. We’ll investigate how those to things work below.

The intQualQuant() function in the DAMisc package allows us to evaluate interactions between quantitative and qualitative variables. Specifying type='slopes' and plot=FALSE will give you all of the simple slopes, the conditional partial effects of the continuous variable given different values of the categorical variable.

library(DAMisc)

intQualQuant(mod3, c("hisp_dum", "over60_pop"), type="slopes", plot=FALSE)## Conditional Effect of over60_pop given hisp_dum

## eff se tstat pvalue

## High 35.5124 11.5591 3.0723 0.003

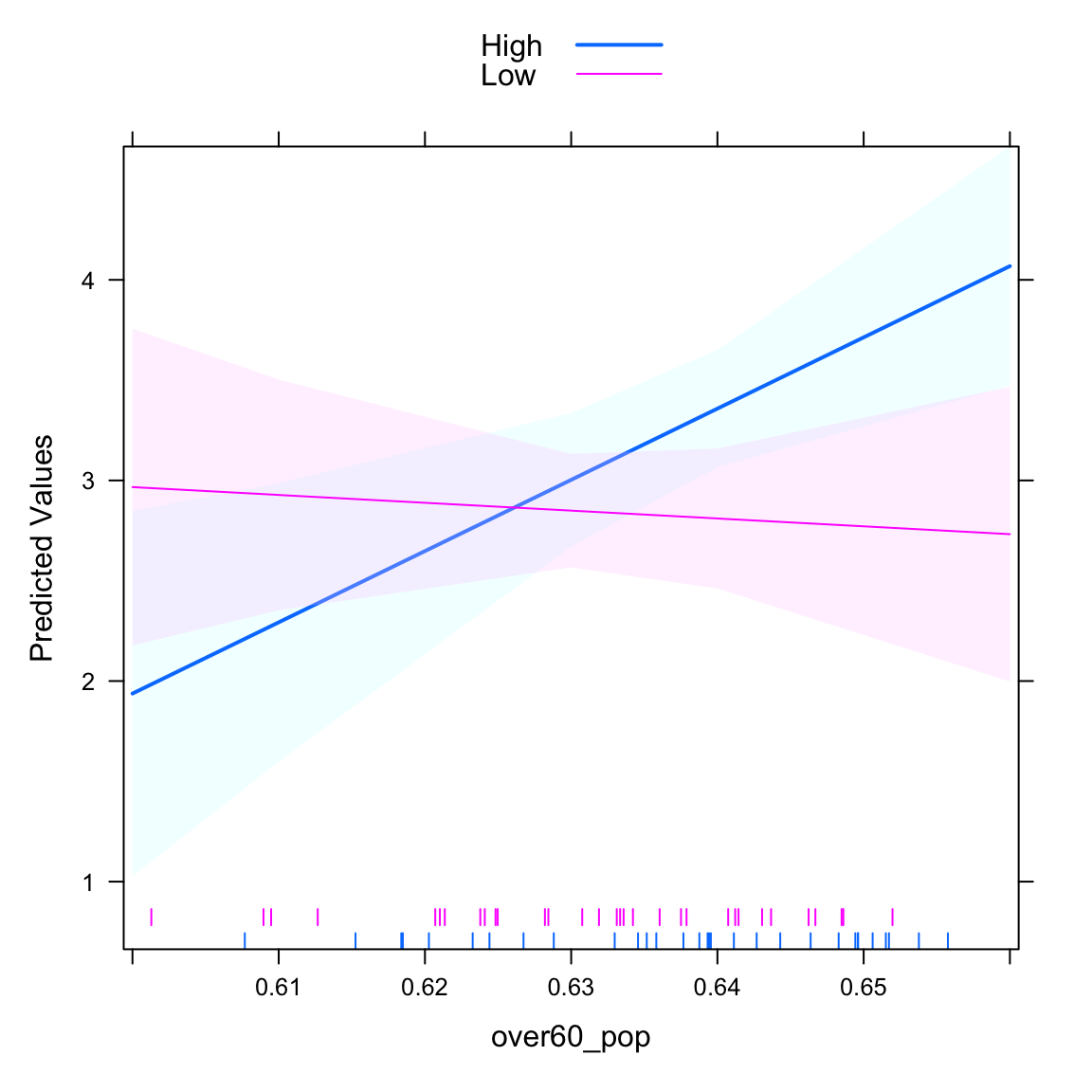

## Low -3.9106 11.7936 -0.3316 0.741If we set plot=TRUE, we see the two lines that give the effect of over60_pop for each of the hisp_dum conditions. The rug plot gives the distribution of over60_pop for each of the groups on hisp_dum. The benefit of the rug plot is to identify places where inferences are plausible and where they are not.

intQualQuant(mod3, c("hisp_dum", "over60_pop"), type="slopes", plot=TRUE) We can also plot the “other side” of the interaction. It basically identifies the difference between the two lines for every different value of

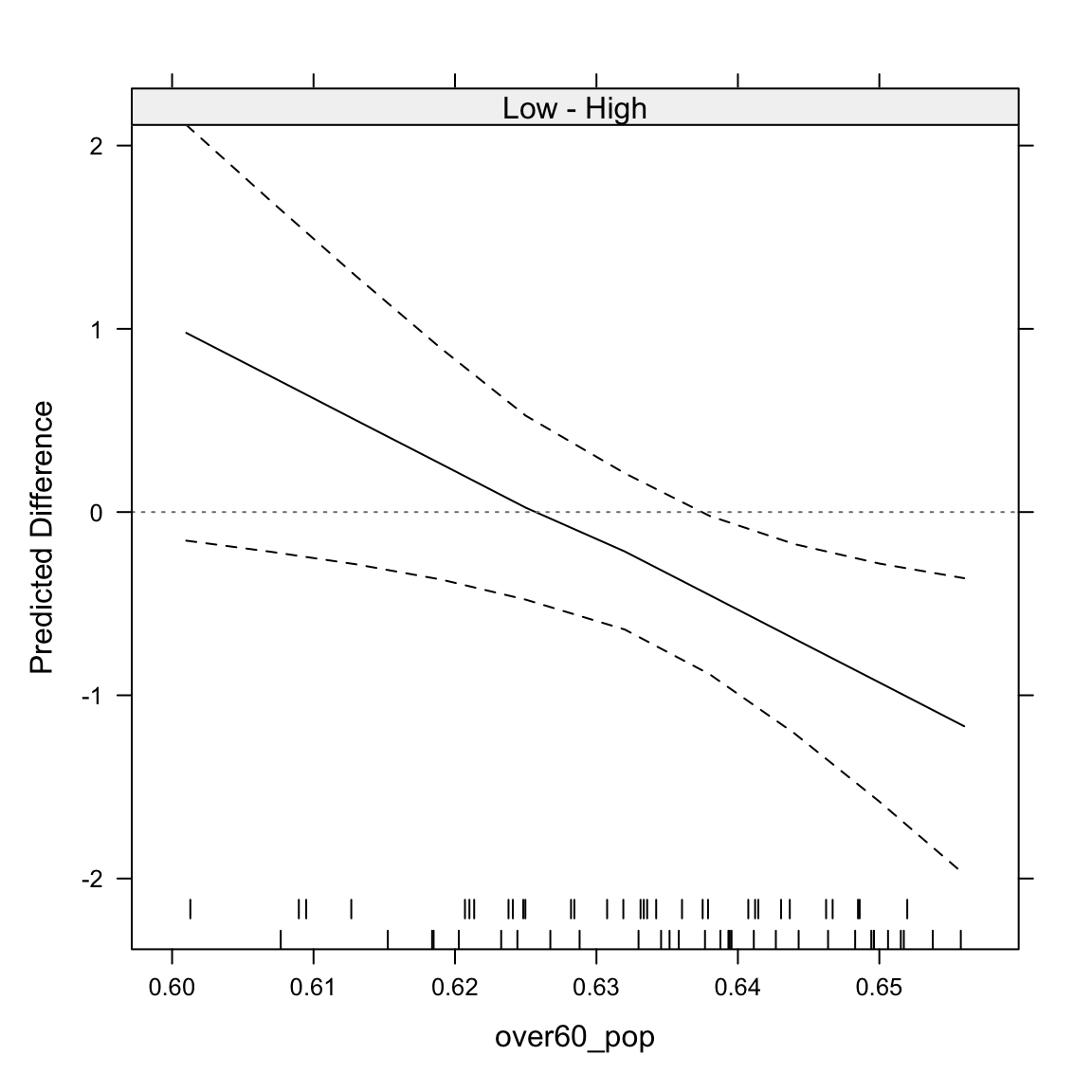

We can also plot the “other side” of the interaction. It basically identifies the difference between the two lines for every different value of over60_pop. We get this by setting type="facs". Here, we see that the difference between high and low Hispanic population counties becomes statistically significant at around 0.64, which is the 36.5 percentile of the over60_pop variable.

intQualQuant(mod3, c("hisp_dum", "over60_pop"), type="facs", plot=TRUE)

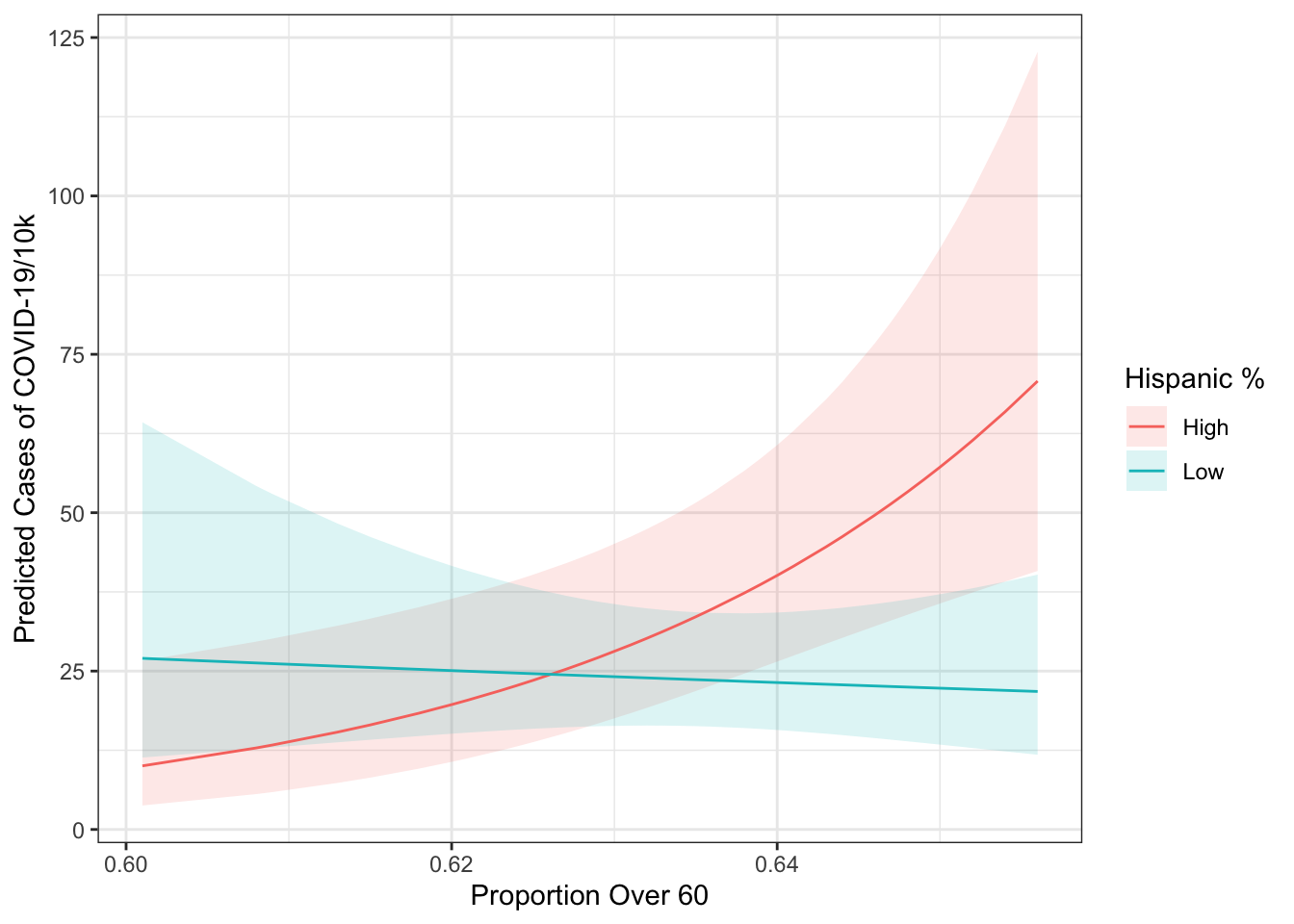

To see how the interaction works out on the un-transformed cases variable, we’re best off using the ggpredict() function.

ggpredict(mod3, terms=c("over60_pop [all]", "hisp_dum")) %>%

ggplot(aes(x=x, y=predicted,

colour=group, fill=group)) +

geom_ribbon(aes(ymin=conf.low, ymax=conf.high),

alpha=.15,

col="transparent") +

geom_line() +

theme_bw() +

labs(x="Proportion Over 60",

y="Predicted Cases of COVID-19/10k",

colour="Hispanic %",

fill="Hispanic %")

You Try It!

Add to your model an interaction of tax and sei10.

- What does the interaction say about the conditional nature of this relationship.

We can also look at two continuous variable interactions. Here, we switch back to an interaction of hisp_pop and over60_pop. Because the interaction term is significant.

mod4 <- lm(log(cases_pc) ~ hisp_pop*over60_pop + rep_maj, data=colo_dat)

summary(mod4)##

## Call:

## lm(formula = log(cases_pc) ~ hisp_pop * over60_pop + rep_maj,

## data = colo_dat)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1.6587 -0.5764 0.0231 0.4838 2.1313

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 6.8018 9.3880 0.725 0.4717

## hisp_pop -72.6391 36.5385 -1.988 0.0515 .

## over60_pop -5.7823 14.8279 -0.390 0.6980

## rep_majYes -0.4678 0.2284 -2.048 0.0451 *

## hisp_pop:over60_pop 115.8929 57.8284 2.004 0.0497 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.8163 on 58 degrees of freedom

## Multiple R-squared: 0.2258, Adjusted R-squared: 0.1724

## F-statistic: 4.229 on 4 and 58 DF, p-value: 0.004516The main way of looking at these effects is through a so-called marginal effects plot. This plots the effect of one variable against the values of the other variable. The DAintfun2() function from the DAMisc package does this for us.

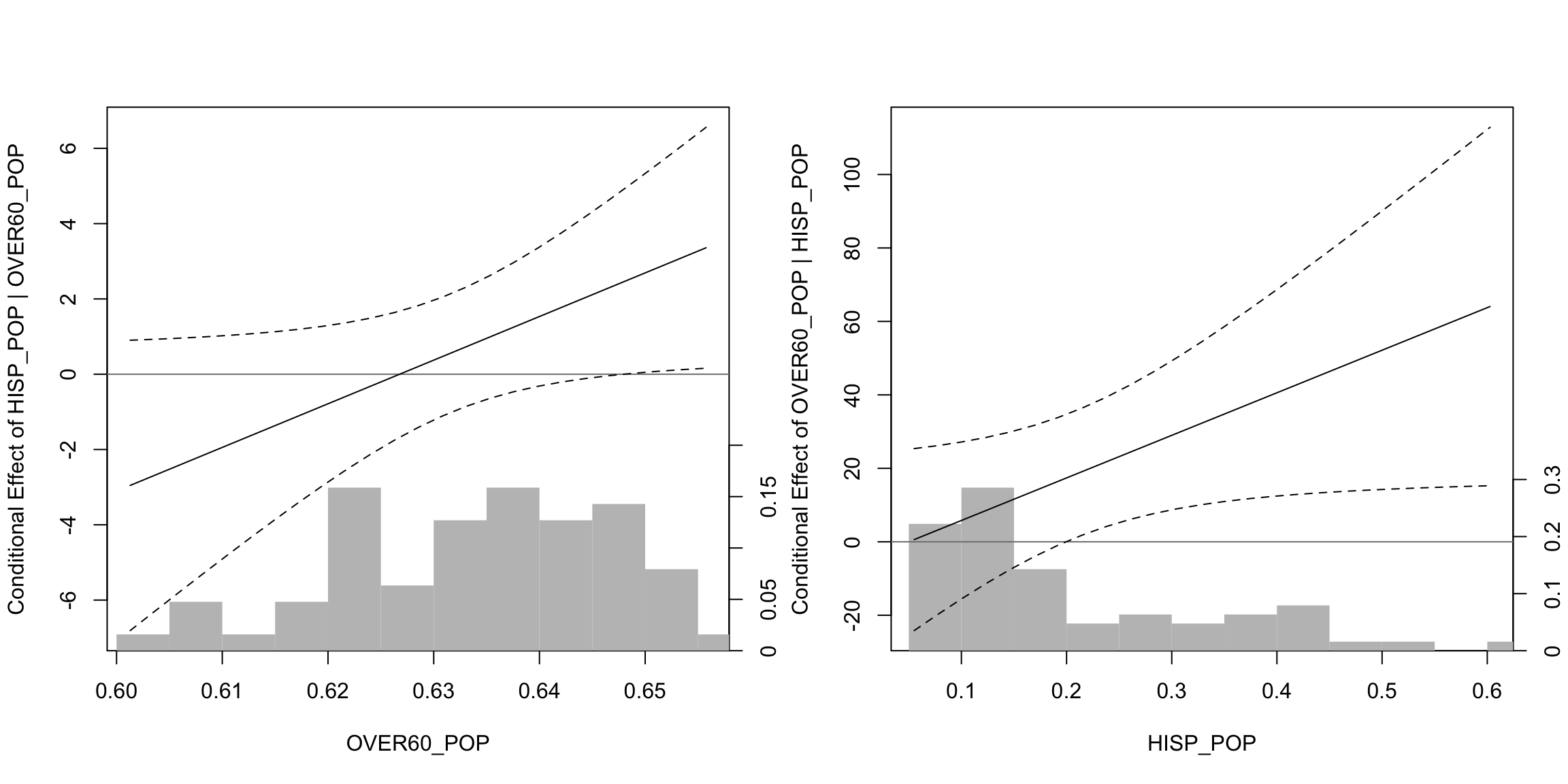

DAintfun2(mod4, c("hisp_pop", "over60_pop"),

rug=FALSE,

hist=TRUE,

scale.hist = .3)

Here, we see that the effect of hisp_pop is only significant and positive when over60_pop takes on values above around 0.645 (more precise estimates of this below). On the other hand (in the right-hand panel), we see that over60_pop is significant when hisp_pop takes on values bigger than about 0.2. If we wanted to figure out where those values are exactly, we could use the changeSig() function (also in the DAMisc package).

changeSig(mod4, c("over60_pop", "hisp_pop"))## LB for B(over60_pop | hisp_pop) = 0 when hisp_pop=0.1993 (65th pctile)

## UB for B(over60_pop | hisp_pop) = 0 when hisp_pop=-134.1685 (< Minimum Value in Data)

## LB for B(hisp_pop | over60_pop) = 0 when over60_pop=0.648 (81th pctile)

## UB for B(hisp_pop | over60_pop) = 0 when over60_pop=-3.553 (< Minimum Value in Data)We were pretty close with the visual inspection, but the results from changeSig() are more precise.

You Try It!

Now, do the following:

- Make a new variable loginc which is the log of realinc and replace log(realinc) with loginc in your model.

- Instead of an interaction between tax and sei10, use an interaction between sei10 and loginc.

- Evaluate the interaction.